StakaterPlatform

Overview

StakaterPlatform is a consolidated solution from Stakater that gives individuals and companies a head start on Kubernetes. It bundles seven stacks of open source tools, chosen according to industry recommendations and best practices, covering monitoring, logging, security, tracing, control, delivery and alerting for a Kubernetes cluster.

End users only need to focus on developing their applications; everything else is managed by StakaterPlatform. StakaterPlatform consists of seven stacks.

Workflow

StakaterPlatform follows the GitOps principle. It uses Flux, an open source tool that tracks a Git repository and keeps the cluster in the desired state described there. To change your cluster, commit the change to the Git repository; it will then be reflected in the Kubernetes cluster.
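The idea of converging a cluster on a desired state can be illustrated with a small conceptual sketch. This is not how Flux is implemented; the function name plan_changes and the dictionary-based representation of manifests are assumptions made purely for illustration.

```python
def plan_changes(desired, actual):
    """Compare desired state (from Git) with actual state (from the cluster).

    Both arguments map a resource name to its spec. The return value lists
    which resources a reconciler would need to create, update or delete.
    """
    to_create = sorted(name for name in desired if name not in actual)
    to_update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    to_delete = sorted(name for name in actual if name not in desired)
    return {"create": to_create, "update": to_update, "delete": to_delete}


if __name__ == "__main__":
    # Hypothetical resources: one new, one changed, one no longer in Git.
    in_git = {"forecastle": {"replicas": 1}, "reloader": {"replicas": 2}}
    in_cluster = {"reloader": {"replicas": 1}, "old-app": {"replicas": 1}}
    print(plan_changes(in_git, in_cluster))
```

A GitOps operator runs a comparison of this kind repeatedly, so a commit to the repository is the only action needed to trigger a change.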

Prerequisites

- Kubernetes cluster with at least 8 vCPUs and 32 GB of RAM

- kubectl (between v1.11 & v1.15.3)

- helm (v2.15.0 or lower)

- A working domain (e.g. stakaterplatform.com)

- SSL certificate for that domain
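The version ranges above can be checked before installing. The following is a minimal sketch, assuming version strings of the form v1.14.8 as commonly printed by kubectl and helm; the helper names are hypothetical and not part of StakaterPlatform.

```python
import re
from typing import Tuple


def parse_version(text: str) -> Tuple[int, int, int]:
    """Parse 'v1.15.3' or '1.15' into (major, minor, patch); patch defaults to 0."""
    match = re.match(r"v?(\d+)\.(\d+)(?:\.(\d+))?", text.strip())
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def kubectl_supported(version: str) -> bool:
    """kubectl must be between v1.11 and v1.15.3 (inclusive)."""
    return (1, 11, 0) <= parse_version(version) <= (1, 15, 3)


def helm_supported(version: str) -> bool:
    """helm must be v2.15.0 or lower."""
    return parse_version(version) <= (2, 15, 0)


if __name__ == "__main__":
    print(kubectl_supported("v1.14.8"), helm_supported("v2.14.3"))
```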

Install via local machine

- Duplicate this repository.

- Update the configuration variables in the variables.config file and provide the relevant values.

- [Recommended but optional] To take full advantage of the tool stack, configure the Additional Variables as well.

- Ensure that the correct context is set for kubectl & helm.

- Run make configure. This makes all the required substitutions in the repository based on the configuration variables. When prompted, commit those changes.

- Add the public SSH key of flux (configs/flux.pub) to your Git repository with write access.

- Once the changes are committed, run make deploy. This deploys flux, which in turn deploys StakaterPlatform via the GitOps strategy. Estimated time for everything to be up and running is 5-10 minutes.

- Use the printed dashboard token to access the Kubernetes dashboard at dashboard-control.YOURDOMAINNAME

- Visit https://forecastle-control.YOURDOMAINNAME and you'll be able to view all applications deployed by StakaterPlatform.

Note

Since the variables.config file and the configs/ directory contain private information, they are not tracked in git and shouldn't be committed. If you do want to commit them, run make track-secrets.

Install via GitLab CI Pipeline

- Duplicate this repository in a GitLab account.

- Update the configuration variables in the variables.config file and provide the relevant values.

- Create a Personal Access Token on GitLab and note it down (available only when created).

- Configure the CI/CD environment variables in <Your GitLab Project> -> Settings -> CI/CD.

- Add the following variables for the pipeline:

| Pipeline Variables | Description |

|---|---|

| KUBE_CONFIG | Base64 encoded KubeConfig of the kubernetes cluster you want to deploy on |

| REPO_ACCESS_TOKEN | Personal access token generated in Step 3 |

| TARGET | Makefile target. Value: pipeline-deploy |

| AWS_ACCESS_KEY_ID | (Define only if CLOUD_PROVIDER is aws) AWS Access Key Id. |

| AWS_SECRET_ACCESS_KEY | (Define only if CLOUD_PROVIDER is aws) AWS Secret Access Key. |

- Add the public SSH key (Deploy Keys), which is printed at the end of the pipeline logs, to your GitLab account with write access.

- Once key is added, StakaterPlatform will deploy on your cluster (Estimated time: 10-15 minutes). 🎊 🎊

- Use the printed dashboard token to access the Kubernetes dashboard at dashboard-control.DOMAIN
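The KUBE_CONFIG pipeline variable expects a base64 encoded kubeconfig. A minimal sketch of producing that value follows; the function name and the default path ~/.kube/config used in the usage example are assumptions, so adjust them to wherever your kubeconfig lives.

```python
import base64
from pathlib import Path


def encode_for_pipeline(raw: bytes) -> str:
    """Return the standard base64 encoding of raw bytes as a single-line string."""
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # Assumed location of the kubeconfig; change it if yours differs.
    kubeconfig = Path.home() / ".kube" / "config"
    if kubeconfig.exists():
        print(encode_for_pipeline(kubeconfig.read_bytes()))
```

The same helper can be applied to the certificate files when filling in the BASE64_ENCODED_SSL_CERTIFICATE_* variables.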

Verification

Locally

Run make verify to run tests to ensure that all the relevant endpoints are up and running.

GitLab CI

Run pipeline with Pipeline variable: TARGET = verify

Basic Configuration

| Variables | Description | Default |

|---|---|---|

| CLOUD_PROVIDER | Name of the k8s cloud provider | nil (aws or azure) |

| DNS_PROVIDER | Cloud DNS Provider | aws (Route53) |

| EXTERNAL_DNS_AWS_ACCESS_KEY_ID | AWS Access Key Id having access to create/delete/update Route53 HostedZone entries | nil |

| EXTERNAL_DNS_AWS_SECRET_ACCESS_KEY | AWS Secret Access Key having access to create/delete/update Route53 HostedZone entries | nil |

| DOMAIN | Domain to use for StakaterPlatform | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_CA_CRT | Base64 encoded Intermediate Certificate value | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_TLS_CRT | Base64 encoded Server Certificate value | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_TLS_KEY | Base64 encoded Certificate Key value | nil |

| STAKATER_PLATFORM_SSH_GIT_URL | SSH URL for your GitHub repo | nil (e.g. git@github.com/stakater/StakaterPlatform.git; note that : is replaced with / in the URL) |

| STAKATER_PLATFORM_BRANCH | Branch to use for STAKATER_PLATFORM_SSH_GIT_URL | master |

For generating certificates view: Creating & using custom SSL certificates
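The STAKATER_PLATFORM_SSH_GIT_URL value differs from the SSH URL that Git hosts display: the colon between host and path is replaced with a slash. The sketch below shows that transformation, assuming the scp-like form git@host:owner/repo.git; the function name is hypothetical.

```python
def to_platform_git_url(ssh_url: str) -> str:
    """Replace the first ':' (between host and path) with '/'.

    URLs that contain no ':' are returned unchanged, since they are
    already in the form expected by variables.config.
    """
    host, separator, path = ssh_url.partition(":")
    if not separator:
        return ssh_url
    return f"{host}/{path}"


if __name__ == "__main__":
    print(to_platform_git_url("git@github.com:stakater/StakaterPlatform.git"))
```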

Detailed Configuration and Stack definitions

To take full advantage of our tool stack, it is recommended to have the following things set-up:

- 3 Slack channels with webhooks added. These are required for:

  - Dev-notifications: Notifications regarding up/down time and new builds from Jenkins
  - infra-alerts: Alerts from infrastructure
  - apps-alerts: Alerts for apps

- An account at [Uptime Robot](https://uptimerobot.com/) for IMC to work and provide you with up/down time alerts. For other supported downtime monitors, visit Ingress Monitor Controller.

Global Variables

| Variables | Description | Default |

|---|---|---|

| CLOUD_PROVIDER | Name of the k8s cloud provider | nil (aws or azure) |

| DNS_PROVIDER | Cloud DNS Provider | aws (Route53). Currently only Route53 is supported. |

| EXTERNAL_DNS_AWS_ACCESS_KEY_ID | AWS Access Key Id | nil |

| EXTERNAL_DNS_AWS_SECRET_ACCESS_KEY | AWS Secret Access Key | nil |

| DOMAIN | Domain to expose StakaterPlatform | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_CA_CRT | Base64 encoded Intermediate Certificate value | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_TLS_CRT | Base64 encoded Server Certificate value | nil |

| BASE64_ENCODED_SSL_CERTIFICATE_TLS_KEY | Base64 encoded Certificate Key value | nil |

| STAKATER_PLATFORM_SSH_GIT_URL | SSH URL for your GitHub repo | nil (e.g. git@github.com/stakater/StakaterPlatform.git; note that : is replaced with / in the URL) |

| STAKATER_PLATFORM_BRANCH | Branch to use for STAKATER_PLATFORM_SSH_GIT_URL | master |

KeyCloak

| Variables | Description | Default |

|---|---|---|

| KEYCLOAK_CLIENT_ID | Client ID for KeyCloak Gatekeeper | stakater-online-platform |

| KEYCLOAK_CLIENT_SECRET | Client Secret for KeyCloak Gatekeeper | 1de22f11-2068-df5h-9418-dawzxqwe2 |

| KEYCLOAK_DEFAULT_USERNAME | Username for the default user created | stakater-user |

| KEYCLOAK_DEFAULT_PASSWORD | Password for the default user created | zUPaZnEfmYpcdd6X |

| KEYCLOAK_DB_USER | KeyCloak DB (Postgresql) username | admin |

| KEYCLOAK_DB_PASSWORD | KeyCloak DB (Postgresql) password | L3VT3hBysLGtsJaZ |

| KEYCLOAK_PASSWORD | Password for the admin user stakater | vuHBBDaVsS35jvF9 |

IngressMonitorController IMC

| Variables | Description | Default |

|---|---|---|

| IMC_API_KEY | API key of the monitor service provider | nil |

| IMC_ALERT_CONTACTS | Alert contacts for the monitor service provider | nil |

Nexus

| Variables | Description | Default |

|---|---|---|

| NEXUS_ADMIN_ACCOUNT_USER | Username for admin account | nexus-admin |

| NEXUS_ADMIN_ACCOUNT_PASSWORD | Password for admin account | L8TcnrwMytCFpAFe |

| NEXUS_CLUSTER_ACCOUNT_USER | Username for cluster admin account | nexus-cluster-admin |

| NEXUS_CLUSTER_ACCOUNT_PASSWORD | Password for cluster admin account | mrzUGWrD9buDYhMF |

Jenkins

| Variables | Description | Default |

|---|---|---|

| JENKINS_NOTIFICATIONS_SLACK_CHANNEL | Slack channel name to notify for jenkins pipeline result | OPTIONAL (e.g. #slack-channel-name) |

| JENKINS_NOTIFICATIONS_SLACK_WEBHOOK_URL | Slack webhook URL to notify for jenkins pipeline result | OPTIONAL |

| JENKINS_PIPELINE_GITHUB_TOKEN | GitHub API token. If configured Pipeline will comment on Pull Requests | OPTIONAL |

| JENKINS_PIPELINE_GITLAB_TOKEN | GitLab API token. If configured Pipeline will comment on Pull Requests | OPTIONAL |

| JENKINS_PIPELINE_BITBUCKET_TOKEN | Bitbucket API token. If configured Pipeline will comment on Pull Requests | OPTIONAL |

| JENKINS_DOCKER_MAVEN_USERNAME | Username for the Maven repository used by Jenkins to store Maven artifacts | admin |

| JENKINS_DOCKER_MAVEN_PASSWORD | Password for the Maven repository used by Jenkins to store Maven artifacts | xJuAWjG4GzrCkPJU |

| JENKINS_LOCAL_NEXUS_USERNAME | Username for the local repository used by Jenkins to store artifacts | admin |

| JENKINS_LOCAL_NEXUS_PASSWORD | Password for the local repository used by Jenkins to store artifacts | LXwEkC4jZzQj3DHY |

| JENKINS_NEXUS_USERNAME | Nexus Username. Used by docker command in Jenkins Pipeline | admin |

| JENKINS_NEXUS_PASSWORD | Nexus Password. Used by docker command in Jenkins Pipeline | CN3B6uY998kpBFDd |

AlertManager

| Variables | Description | Default |

|---|---|---|

| SLACK_INFRA_ALERTS_CHANNEL | Slack channel name to send Alertmanager infrastructure alerts | #stakater-platform-infra-alerts |

| SLACK_INFRA_ALERTS_WEBHOOK_URL | Slack channel webhook URL to send Alertmanager infrastructure alerts | OPTIONAL |

| SLACK_APPS_ALERTS_CHANNEL | Slack channel name to send Alertmanager application alerts | #stakater-platform-apps-alerts |

| SLACK_APPS_ALERTS_WEBHOOK_URL | Slack channel webhook URL to send Alertmanager application alerts | OPTIONAL |

Grafana

| Variables | Description | Default |

|---|---|---|

| GRAFANA_USERNAME | Grafana dashboard username | admin |

| GRAFANA_PASSWORD | Grafana dashboard password | CN3B6uY998kpBFDd |

Flux

The configs directory holds the SSH keys flux (private) and flux.pub (public), which flux uses for GitOps. You can supply your own SSH keys by pasting their contents into these files. Otherwise, a new unique key pair is generated for you every time.

Uninstall

Run make destroy to remove StakaterPlatform from your cluster.

Production Configuration and Hardening

- Change default usernames and passwords for all tools (variables.config)

- Add your own SSH keys for flux

- Use Identity Providers (e.g. Google, Active Directory etc.) and configure KeyCloak to use them

- Replace all secrets with sealed-secrets. Stakater Workshop - Sealed Secrets

- Use keycloak for SSO over all exposed applications

Stacks

Control Stack

Overview

This stack provides tools that manage the components of StakaterPlatform.

Tools Introduction

- External DNS

ExternalDNS synchronizes exposed Kubernetes Services and Ingresses with DNS providers. ExternalDNS makes Kubernetes resources discoverable via public DNS servers. Like KubeDNS, it retrieves a list of resources (Services, Ingresses, etc.) from the Kubernetes API to determine a desired list of DNS records. Unlike KubeDNS, however, it's not a DNS server itself, but merely configures other DNS providers accordingly.

- Nginx Ingress

nginx-ingress is an Ingress controller that uses a ConfigMap to store the nginx configuration.

- Descheduler

In Kubernetes, the Kubernetes Scheduler component schedules pods on nodes based on the defined policy. Pods may need to be moved to other nodes for the following reasons:

  - Node utilization: Pods will be scheduled to other nodes if a node is under- or over-utilized.
  - Scheduling policies: Scheduling policies like taints, labels and node/pod affinity rules have changed.
  - Node failure: When a node fails, its pods will be scheduled to another available node in the cluster.
  - Node addition: When a node is added to the cluster, pods from other over-utilized nodes will be scheduled to the new node.

Descheduler, based on its policy, can move and evict pods, but it doesn't reschedule them. Pod rescheduling is done by the Kubernetes Scheduler.

- Forecastle

Forecastle is a control panel which dynamically discovers and provides a launchpad to access applications deployed on Kubernetes.

- Reloader

Reloader watches for changes in ConfigMaps and Secrets and performs rolling upgrades on Pods with their associated DeploymentConfigs, Deployments, DaemonSets and StatefulSets.

- Kubernetes Dashboard

Kubernetes Dashboard is a general purpose, web-based UI for Kubernetes clusters. It allows users to manage applications running in the cluster and troubleshoot them, as well as manage the cluster itself.

- GitWebhookProxy

GitWebhookProxy is a proxy that lets webhooks reach a Jenkins instance running behind a firewall. Jenkins is a capable tool for both CI and CD, but it is a gold mine for attackers if left in the wild with wide open access, so we always want to put it behind a firewall. Behind a firewall, however, webhooks no longer work, so at Stakater we use GitWebhookProxy to let webhooks through the firewall and trigger pipelines in Jenkins.

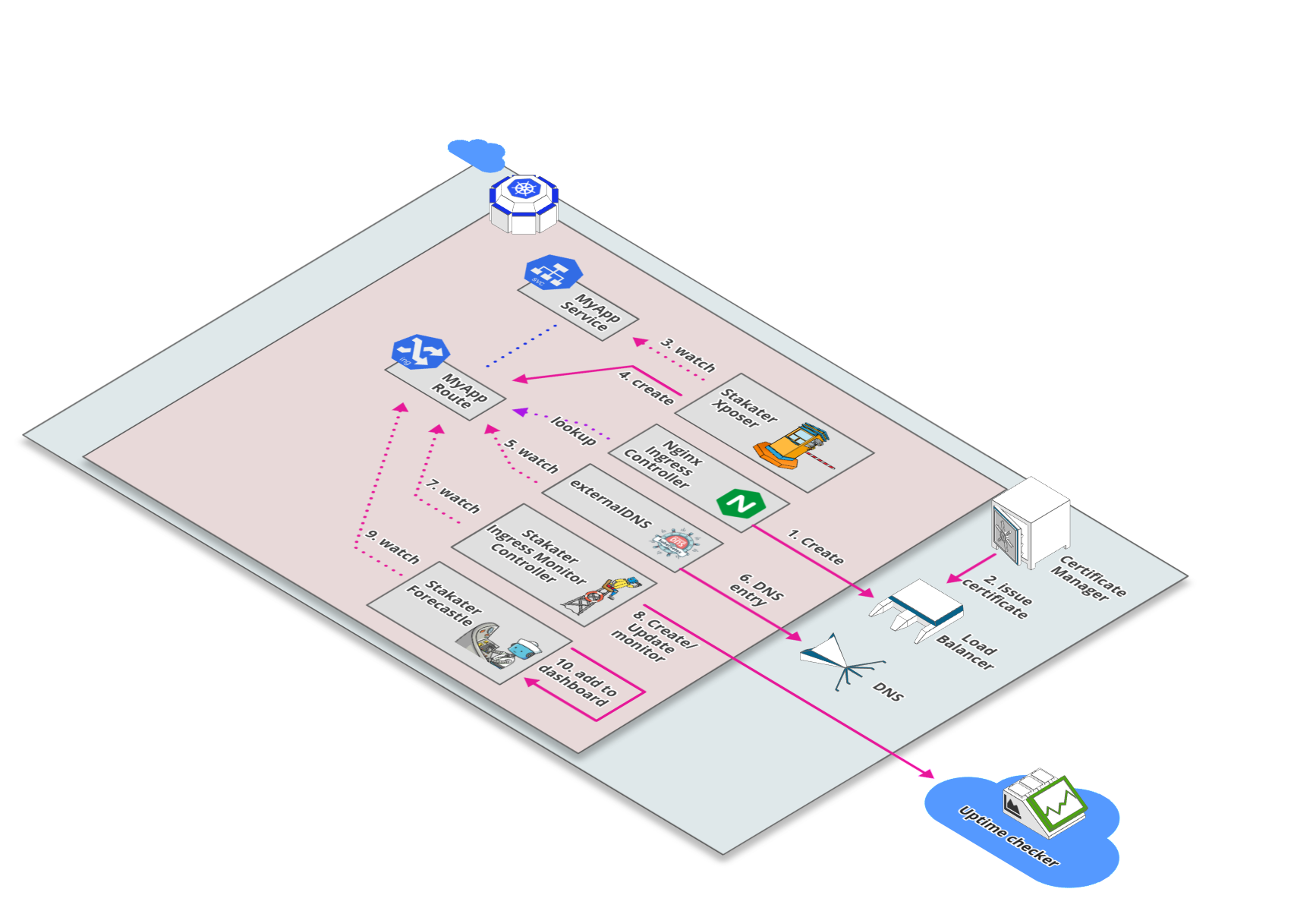

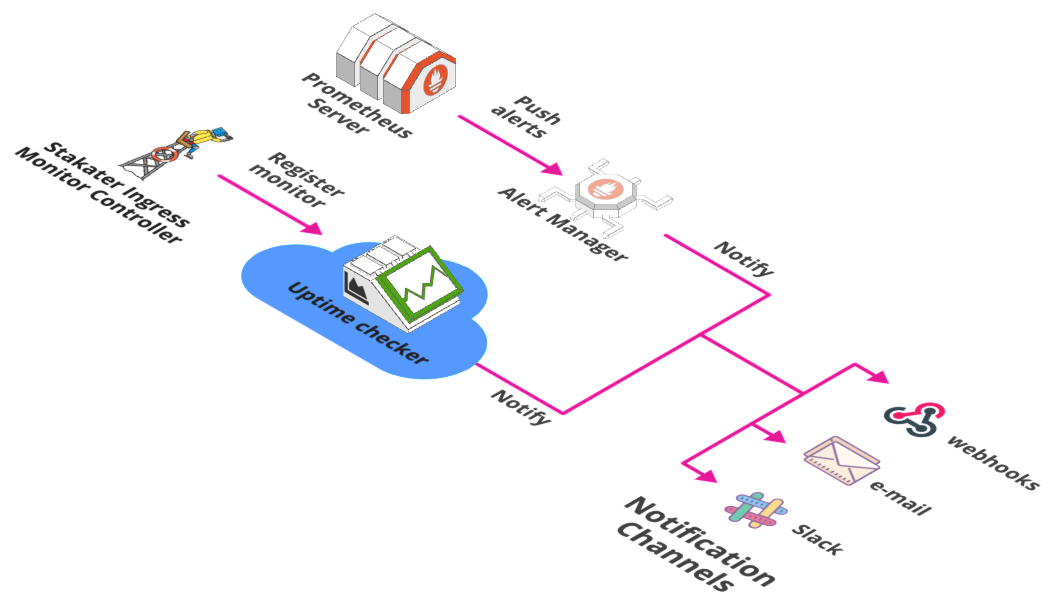

- Ingress Monitor Controller

A Kubernetes/Openshift controller to watch ingresses/routes and create liveness alerts for your apps/microservices in Uptime checkers.

- Xposer

Xposer watches all services running in a cluster. It creates, updates and deletes Ingresses, and uses cert-manager to generate TLS certificates automatically, based on annotations.

Tools Matrix

| Tool | Chart Repository | Cherry Pickable | SSO | Pre-Requisites |

|---|---|---|---|---|

| External DNS | Public | Yes | N/A | None |

| Nginx Ingress | Public | Yes | N/A | None |

| Descheduler | Stakater | Yes | N/A | None |

| Forecastle | Stakater | Yes | Yes | Keycloak |

| Reloader | Stakater | Yes | N/A | None |

| K8s Dashboard | Public | Yes | Depends | None |

| Gitwebhookproxy | Stakater | Yes | N/A | None |

| IMC | Stakater | Yes | N/A | None |

| Xposer | Stakater | Yes | N/A | None |

Default Configurations

The following are the default configurations that StakaterPlatform uses for the Control stack. They can be found under the configs/ directory in the StakaterPlatform GitHub repository.

IngressMonitorController IMC

IMC supports multiple online monitoring services. By default UptimeRobot is configured. Provide IMC_API_KEY and IMC_ALERT_CONTACTS in variables.config to monitor every ingress via UptimeRobot.

| Variables | Description | Default |

|---|---|---|

| IMC_API_KEY | API key of the monitor service provider | nil |

| IMC_ALERT_CONTACTS | Alert contacts for the monitor service provider | nil |

For more details, see the IMC GitHub repository.

configs/imc.yaml:

    # For more details: https://github.com/stakater/IngressMonitorController
    providers:
      - name: UptimeRobot
        apiKey: IMC_API_KEY
        apiURL: https://api.uptimerobot.com/v2/
        alertContacts: "IMC_ALERT_CONTACTS"
    enableMonitorDeletion: true
    monitorNameTemplate: "{{.IngressName}}-{{.Namespace}}"
    resyncPeriod: 0 # how often (in seconds) monitors should be synced to their Kubernetes resources (0 = disabled)
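To see what monitor names the monitorNameTemplate setting produces, the substitution can be imitated with plain string replacement. This is only an illustration: IMC evaluates the template itself, and the function name and the example ingress and namespace values below are assumptions.

```python
def render_monitor_name(template: str, ingress_name: str, namespace: str) -> str:
    """Substitute the two placeholders used in the default template."""
    return (
        template
        .replace("{{.IngressName}}", ingress_name)
        .replace("{{.Namespace}}", namespace)
    )


if __name__ == "__main__":
    default_template = "{{.IngressName}}-{{.Namespace}}"
    # Hypothetical ingress called "forecastle" in the "control" namespace.
    print(render_monitor_name(default_template, "forecastle", "control"))
```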

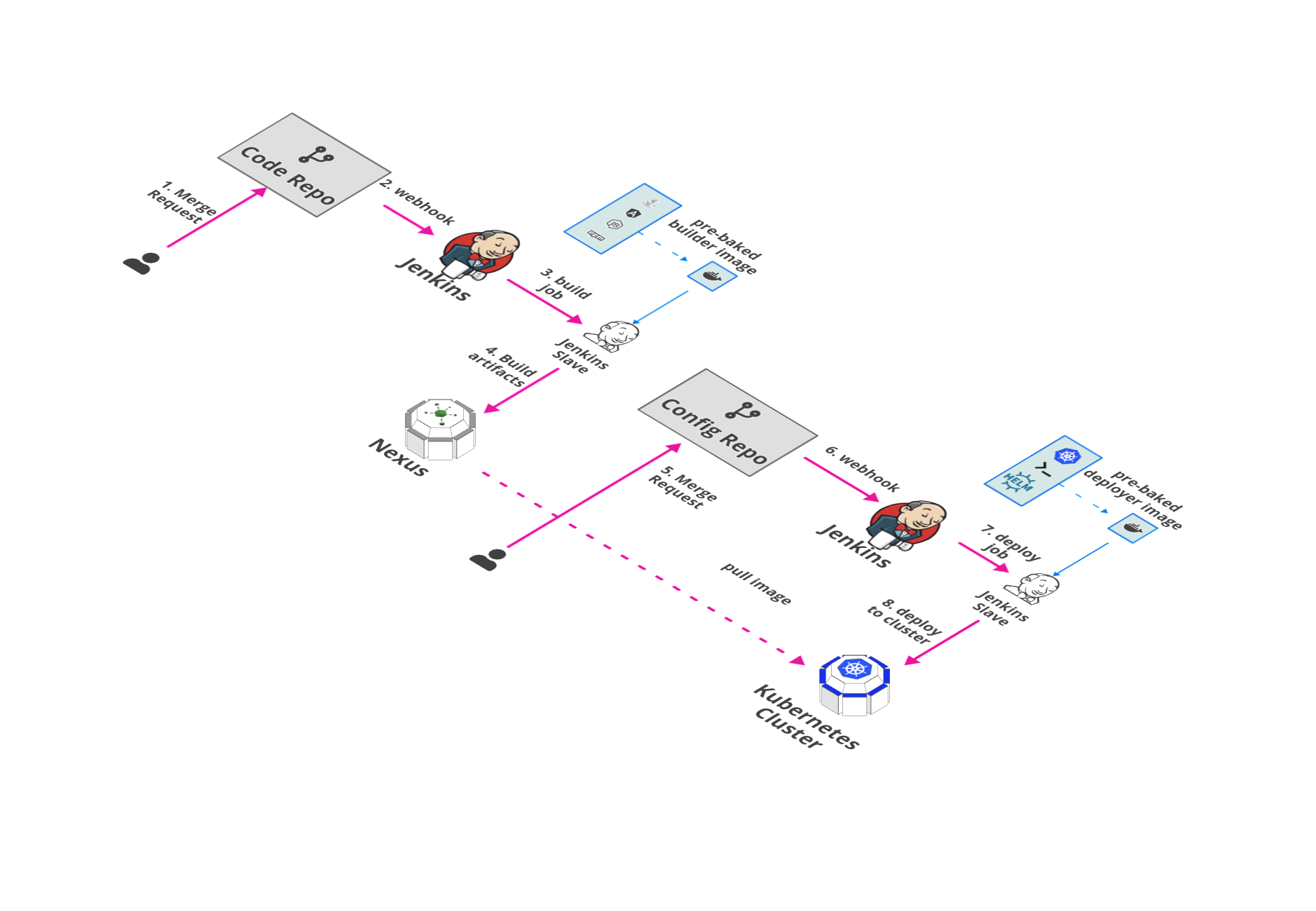

Delivery Stack

Overview

Delivery Stack is responsible for CI/CD and artifacts management of pipelines.

Tools Introduction

- Jenkins

At Stakater we use Jenkins as our continuous integration, continuous delivery and continuous testing server. Jenkins is a self-contained, open source automation server which can be used to automate all sorts of tasks related to building, testing, and delivering or deploying software.

- Sonatype-Nexus

Nexus is a repository manager that can store and manage components, build artifacts, and release candidates in one central location. At Stakater, we use Nexus to store Docker images of our production applications, such as stakaterfrontend and emailservice.

Tools Matrix

| Tool | Chart Repository | Cherry Pickable | SSO | Pre-Requisites |

|---|---|---|---|---|

| Jenkins | Public | Yes | Yes | KeyCloak |

| Sonatype-Nexus | Public | Yes | No | None |

| RDLM | Stakater | Yes | N/A | None |

Default Configurations

The following are the default configurations that StakaterPlatform uses for the Delivery stack. They can be found under the configs/ directory in the StakaterPlatform GitHub repository.

Jenkins

Jenkins provides automation pipelines for the CI/CD workflow.

Maven Configs

The following variables should be configured in variables.config for caching Maven artifacts and speeding up Maven builds.

| Variables | Description | Default |

|---|---|---|

| JENKINS_DOCKER_MAVEN_USERNAME | Username for the Maven repository used by Jenkins to store Maven artifacts | admin |

| JENKINS_DOCKER_MAVEN_PASSWORD | Password for the Maven repository used by Jenkins to store Maven artifacts | xJuAWjG4GzrCkPJU |

| JENKINS_LOCAL_NEXUS_USERNAME | Username for the local repository used by Jenkins to store artifacts | admin |

| JENKINS_LOCAL_NEXUS_PASSWORD | Password for the local repository used by Jenkins to store artifacts | LXwEkC4jZzQj3DHY |

| JENKINS_NEXUS_USERNAME | Nexus Username. Used by docker command in Jenkins Pipeline | admin |

| JENKINS_NEXUS_PASSWORD | Nexus Password. Used by docker command in Jenkins Pipeline | CN3B6uY998kpBFDd |

configs/jenkins-maven-config.xml:

    <settings>
      <!-- sets the local maven repository outside of the ~/.m2 folder for easier mounting of secrets and repo -->
      <localRepository>${user.home}/.mvnrepository</localRepository>
      <mirrors>
        <mirror>
          <id>nexus</id>
          <mirrorOf>external:*</mirrorOf>
          <url>http://nexus.release/repository/public/</url>
        </mirror>
      </mirrors>
      <!-- lets disable the download progress indicator that fills up logs -->
      <interactiveMode>false</interactiveMode>
      <servers>
        <server>
          <id>docker-delivery.DOMAIN:443</id>
          <username>JENKINS_DOCKER_MAVEN_USERNAME</username>
          <password>JENKINS_DOCKER_MAVEN_PASSWORD</password>
        </server>
        <server>
          <id>local-nexus</id>
          <username>JENKINS_LOCAL_NEXUS_USERNAME</username>
          <password>JENKINS_LOCAL_NEXUS_PASSWORD</password>
        </server>
        <server>
          <id>nexus</id>
          <username>JENKINS_NEXUS_USERNAME</username>
          <password>JENKINS_NEXUS_PASSWORD</password>
        </server>
        <server>
          <id>oss-sonatype-staging</id>
          <username></username>
          <password></password>
        </server>
      </servers>
      <profiles>
        <profile>
          <id>nexus</id>
          <properties>
            <altDeploymentRepository>local-nexus::default::http://nexus.release/repository/maven-releases/</altDeploymentRepository>
            <altReleaseDeploymentRepository>local-nexus::default::http://nexus.release/repository/maven-releases/</altReleaseDeploymentRepository>
            <altSnapshotDeploymentRepository>local-nexus::default::http://nexus.release/repository/maven-snapshots/</altSnapshotDeploymentRepository>
          </properties>
          <repositories>
            <repository>
              <id>central</id>
              <url>http://central</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </repository>
          </repositories>
          <pluginRepositories>
            <pluginRepository>
              <id>central</id>
              <url>http://central</url>
              <releases><enabled>true</enabled></releases>
              <snapshots><enabled>true</enabled></snapshots>
            </pluginRepository>
          </pluginRepositories>
        </profile>
        <profile>
          <id>release</id>
          <properties>
            <gpg.executable>gpg</gpg.executable>
            <gpg.passphrase>mysecretpassphrase</gpg.passphrase>
          </properties>
        </profile>
      </profiles>
      <activeProfiles>
        <!--make the profile active all the time -->
        <activeProfile>nexus</activeProfile>
      </activeProfiles>
    </settings>

Jenkins docker cfg

Jenkins uses Docker to build images for the CI/CD pipeline. To push and pull images from the image registry (Nexus in StakaterPlatform), a Docker configuration file needs to be provided. This configuration file corresponds to $HOME/.docker/config.json. Configure the following variables in variables.config to allow pull/push from the Nexus repository in Jenkins.

| Variables | Description | Default |

|---|---|---|

| JENKINS_NEXUS_USERNAME | Nexus Username. Used by docker command in Jenkins Pipeline | admin |

| JENKINS_NEXUS_PASSWORD | Nexus Password. Used by docker command in Jenkins Pipeline | CN3B6uY998kpBFDd |

JENKINS_NEXUS_AUTH is replaced by the base64 encoding of the above variables, in this format: NEXUS_ADMIN_ACCOUNT_USER:NEXUS_ADMIN_ACCOUNT_PASSWORD

configs/jenkins.json:

    {
      "auths": {
        "docker-delivery.DOMAIN:443": {
          "auth": "JENKINS_NEXUS_AUTH"
        }
      }
    }
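The auth value in a Docker config.json is the base64 encoding of username:password. The sketch below shows how such a value and the surrounding JSON could be produced by hand; the function names and the example credentials and registry host are assumptions, and make configure performs the real substitution for you.

```python
import base64
import json


def docker_auth(username: str, password: str) -> str:
    """Return base64("username:password"), the format Docker expects in "auth"."""
    credentials = f"{username}:{password}".encode("utf-8")
    return base64.b64encode(credentials).decode("ascii")


def docker_config(registry: str, username: str, password: str) -> str:
    """Build the JSON document for a single registry entry."""
    config = {"auths": {registry: {"auth": docker_auth(username, password)}}}
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Placeholder credentials and domain, for illustration only.
    print(docker_config("docker-delivery.example.com:443", "admin", "secret"))
```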

Nexus

Nexus is used as a private image repository to push/pull images and artifacts. Configure the following variables in variables.config to configure the Nexus registry.

| Variables | Description | Default |

|---|---|---|

| NEXUS_ADMIN_ACCOUNT_USER | Username for admin account | nexus-admin |

| NEXUS_ADMIN_ACCOUNT_PASSWORD | Password for admin account | L8TcnrwMytCFpAFe |

| NEXUS_CLUSTER_ACCOUNT_USER | Username for cluster admin account | nexus-cluster-admin |

| NEXUS_CLUSTER_ACCOUNT_PASSWORD | Password for cluster admin account | mrzUGWrD9buDYhMF |

configs/nexus-admin-account.json:

{"name": "NEXUS_ADMIN_ACCOUNT_USER","type": "groovy","content": "security.addUser('NEXUS_ADMIN_ACCOUNT_USER', 'Stakater', 'Admin', 'user@gmail.com', true, 'NEXUS_ADMIN_ACCOUNT_PASSWORD', ['nx-admin'])"}

configs/nexus-cluster-account.json:

{"name": "NEXUS_CLUSTER_ACCOUNT_USER","type": "groovy","content": "security.addRole('cluster', 'cluster', 'User with privileges to allow read access to repo content and healtcheck', ['nx-healthcheck-read','nx-repository-view-docker-stakater-docker-browse','nx-repository-view-docker-stakater-docker-read','nx-search-read'], ['nx-anonymous']); security.addUser('NEXUS_CLUSTER_ACCOUNT_USER', 'Cluster', 'Cluster', 'user@gmail.com', true, 'NEXUS_CLUSTER_ACCOUNT_PASSWORD', ['cluster'])"}

Storage Details

| Tool | PVC | Recommended Space |

|---|---|---|

| Jenkins | stakater-delivery-jenkins | 8Gi |

| Sonatype-Nexus | stakater-delivery-sonatype-nexus-data-big | 80Gi |

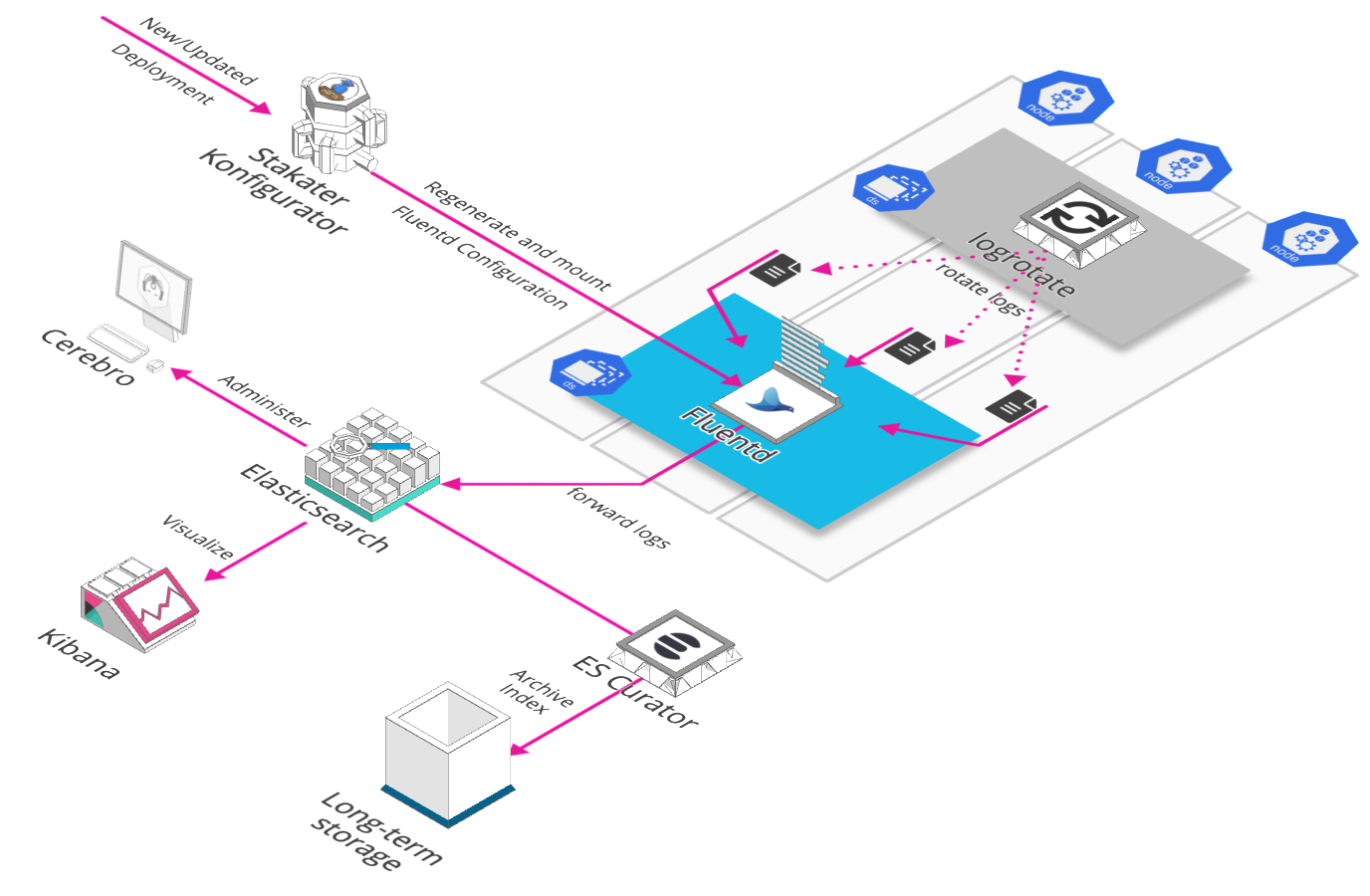

Logging Stack

Overview

Logging is one of the pillars of observability. In the simplest sense, logging is about recording discrete events. Monitoring these logs is important, as they provide detailed information about events and errors that helps us understand the flow of events in the application, and where and why the normal flow gets disrupted in case of an error. Application logs are usually generated with different levels of severity such as ERROR, WARN, INFO, etc., which indicate the impact of the logged event and the level of attention it should be given. Apart from application logs, it is also prudent to monitor logs from the rest of the application environment, such as middleware, application servers, and system logs. This provides a complete picture that is not confined to the application, so a system level issue impacting the application can also be captured and observed.

At Stakater we use open source tools for internal use as well as our customer implementations, and for log monitoring our stack of choice is EFK (short for Elasticsearch, Fluentd and Kibana). While Elasticsearch and Kibana are used to index, search and visualize the logs, Fluentd is the log aggregator, essentially going door to door and collecting all the logs that are configured to be collected.

Details

- Log File Generation

Let's first take a look at how a log file is generated on the cluster. All applications run in Docker containers, so how they generate logs is important. At Stakater we follow the best practice of writing our logs to the STDOUT stream in the container. This complies with the twelve-factor app principle that the application running within the container should not concern itself with how to write, buffer or store the logs. It should simply write its stream of logs to STDOUT, and it is the responsibility of the execution environment to capture, process and route the logs in whichever way is suitable. We also do not need to add volume mounts to map our log files outside of the container for processing, which would bring the path of the log file into the equation: should the file path change in future, you would need to handle that in the volume mount and possibly in downstream systems too.

Another benefit of writing to STDOUT is that the logs are automatically captured by docker logs, which is not the case for logs written elsewhere, such as to a custom log file. Docker captures these logs based on the logging driver it is configured with. Using the default json-file driver means that the logs are stored in JSON format on disk in a specific file path.

In case you are using a third party application or tool which writes its logs to a log file rather than STDOUT, you need not worry. One suitable workaround is adding a symbolic link (symlink) from the log file to /dev/stdout. An example of this is Nginx, where the server itself writes its logs to a log file, but the officially provided Dockerfile adds the symlink.

Outside of the containers, Kubernetes creates symlinks to the docker log files at /var/log/containers/*.log on the Node. We can therefore easily locate and capture logs within our fluentd daemonset.

We do not handle persistent storage of log files at this point, since our fluentd daemonset forwards the logs in real time. Persistence of the log files is handled at a later point in the logging flow, as we will see soon.

- Logging Flow

Fluentd

Fluentd is an open source data collector for a unified logging layer. It has a flexible plugin architecture, allowing easy extension of its functionality. We deploy it as a DaemonSet on our cluster to ensure that all Nodes run a copy of the fluentd Pod. Any new node that is added to the cluster will also get a fluentd pod automatically.

The fluentd input plugin is responsible for reading data from these log sources and generating a Fluentd event for it. We use the in_tail Input plugin, which allows Fluentd to read events from the tail of text files. The position up to which fluentd has read a particular log file is recorded in a position file, and the next time fluentd picks up reading from that position. The input is parsed based on the configuration provided to the input plugin.

The fluentd event contains information such as where an event comes from, the time of the event, and the actual log content. We use the kubernetes_metadata_filter plugin to enrich the log event with kubernetes pod and namespace metadata. The plugin gets basic metadata about the container that emitted a given log record using the source of the log record.

These log events are matched to an Output plugin type in the Fluentd configuration. We use the out_elasticsearch Output plugin to forward these records to Elasticsearch.
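The position-file mechanism used when tailing logs can be illustrated with a short sketch. This is not Fluentd's implementation; the function name and the single-offset position file format are assumptions chosen to keep the example small.

```python
import os


def read_new_lines(log_path: str, pos_path: str) -> list:
    """Return lines appended to log_path since the previous call.

    The byte offset reached so far is stored in pos_path, so a restart
    resumes where the previous read stopped instead of re-reading the file.
    """
    offset = 0
    if os.path.exists(pos_path):
        with open(pos_path) as pos_file:
            offset = int(pos_file.read().strip() or 0)

    # If the log shrank (for example after rotation), start from the top.
    if os.path.getsize(log_path) < offset:
        offset = 0

    with open(log_path, "rb") as log_file:
        log_file.seek(offset)
        data = log_file.read()
        new_offset = log_file.tell()

    with open(pos_path, "w") as pos_file:
        pos_file.write(str(new_offset))

    return data.decode("utf-8").splitlines()
```

Calling the function twice in a row without new log output returns an empty list the second time, which is the behaviour that prevents duplicate events after a collector restart.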

Konfigurator

Konfigurator is a Kubernetes operator that can dynamically generate app configuration when Kubernetes resources change. It uses the KonfiguratorTemplate resource type to inject configuration at a specified path inside the fluentd DaemonSet's container.

    apiVersion: konfigurator.stakater.com/v1alpha1
    kind: KonfiguratorTemplate
    metadata:
      name: fluentd
      namespace: logging
      labels:
        app: konfigurator
    spec:
      renderTarget: ConfigMap
      app:
        name: stakater-logging-fluentd
        kind: DaemonSet
        volumeMounts:
        - mountPath: /etc/fluent
          container: stakater-logging-fluentd
      templates:
        fluent.conf: |
          <add fluentd configurations here>

Eventrouter

Eventrouter is a Kubernetes service that forwards events to a sink. Events are an essential part of a cluster and provide great insight into your cluster state; eventrouter makes it easy to persist those events in a user-specified sink, e.g. stdout, AWS S3 etc. By default kube-apiserver has the --event-ttl flag set to 1 hour, so it retains events only for that amount of time. Since we use Elasticsearch as the persistence layer of our logging stack, all we do is print logs from eventrouter to STDOUT; those logs are then picked up by Fluentd and forwarded to Elasticsearch.

Logrotate

Log rotation essentially renames the old log file and creates a new log file in its place for continued capturing of logs. This is done so that the file size remains manageable. At Stakater we use the Linux logrotate utility for this, also as a daemonset so that it runs on every node like fluentd. At the moment there isn't a public chart available in the official helm repository, so we are using our own, which you can find here.

We can see an example configuration as follows. The schedule is maintained through cron and the rest of the parameters such as path and size of file, number of rotations, etc. are specified in the configuration:

    environment:
      cronSchedule: 0 */12 * * *
    config:
      k8sRotatorConf: |-
        /var/lib/docker/containers/*/*.log {
            rotate 5
            copytruncate
            missingok
            notifempty
            compress
            maxsize 200M
            daily
            create 0644 root root
        }
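The example configuration uses the copytruncate directive, which rotates a little differently from a rename: logrotate copies the current log to the rotated name and then truncates the original in place, so the process writing the log keeps its open file handle. The sketch below imitates that behaviour under simplified assumptions (no compression, no rotation count); the function name is hypothetical.

```python
import shutil


def copytruncate(log_path: str, rotated_path: str) -> None:
    """Copy the log to rotated_path, then empty the original file in place."""
    shutil.copyfile(log_path, rotated_path)
    with open(log_path, "r+b") as log_file:
        # The file itself is kept, so writers holding it open are unaffected.
        log_file.truncate(0)
```

Lines written between the copy and the truncate can be lost, which is the usual trade-off of this mode.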

Elasticsearch

Elasticsearch is basically a NoSQL database that became popular in the Logging domain as part of the ELK/ElasticStack. As we saw before, Fluentd forwards the log streams to Elasticsearch, which then goes on to index and store the log information. Elasticsearch basically allows us to easily search logs based on the metadata that was added by Fluentd. We can easily get results from its REST API to look at logs of a particular application, or apps on a particular node, or even search for all ERROR logs that may have been generated throughout the cluster. A RESTful api however is not a user friendly way of viewing log information, and can also be restricting to one query at a time. A more powerful use of this is to be able to see the log data in terms of trends, and also aggregations. This is where Kibana comes in as we will see in a subsequent section.

With Elasticsearch we would like to have persistent storage, so that our log data and indices are safe in case of a server crash. We therefore back the Elasticsearch deployment with a Persistent Volume Claim (PVC) on Kubernetes. This way the logs are persisted across Node restarts.

ES Curator

We may also want to archive our Elasticsearch indices instead of keeping them all in our EBS volumes since the beginning of time. Old logs may not be needed, and we can move them to a cheaper form of storage, such as an AWS S3 bucket with Glacier storage. In such cases we use ES Curator, which helps us curate our Elasticsearch indices. The following example shows how the curator cron schedule and other properties can be configured.

    config:
      cronSchedule: 0 0 * * *
      esHost: elasticsearch
      esPort: 9200
      snapshotName: stackator-es
      bucketName: 12345678912-stakater-store
      bucketBasePath: /es-indices-snapshots/
      awsRegion: us-east-2
      timeUnit: days
      olderThan: 30
      loglevel: INFO
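The timeUnit and olderThan settings select indices by age. The sketch below shows one way such a selection could work, assuming daily indices named logstash-YYYY.MM.DD; both that naming scheme and the function name are assumptions for illustration, and this is not Curator's own code.

```python
from datetime import date, datetime, timedelta


def indices_older_than(index_names, days, today, prefix="logstash-"):
    """Return the names of daily indices whose date is more than `days` old."""
    cutoff = today - timedelta(days=days)
    selected = []
    for name in index_names:
        if not name.startswith(prefix):
            continue  # not a daily log index, e.g. Kibana's own index
        try:
            index_day = datetime.strptime(name[len(prefix):], "%Y.%m.%d").date()
        except ValueError:
            continue  # suffix is not a date, so leave the index alone
        if index_day < cutoff:
            selected.append(name)
    return selected


if __name__ == "__main__":
    names = ["logstash-2020.01.15", "logstash-2020.02.20", ".kibana"]
    print(indices_older_than(names, days=30, today=date(2020, 3, 1)))
```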

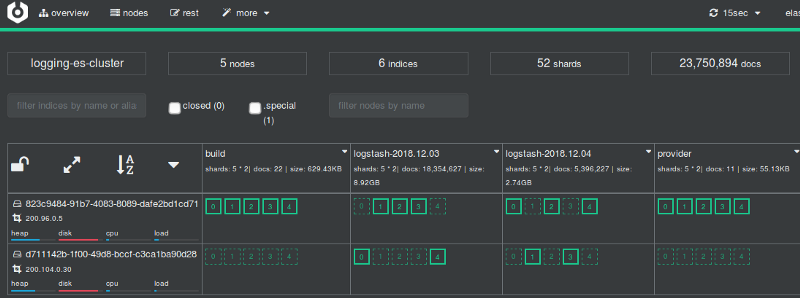

Cerebro

Cerebro is a tool we use for web administration of Elasticsearch. It can be useful to get a web view of the elasticsearch nodes, indices, shards, resource usage etc.

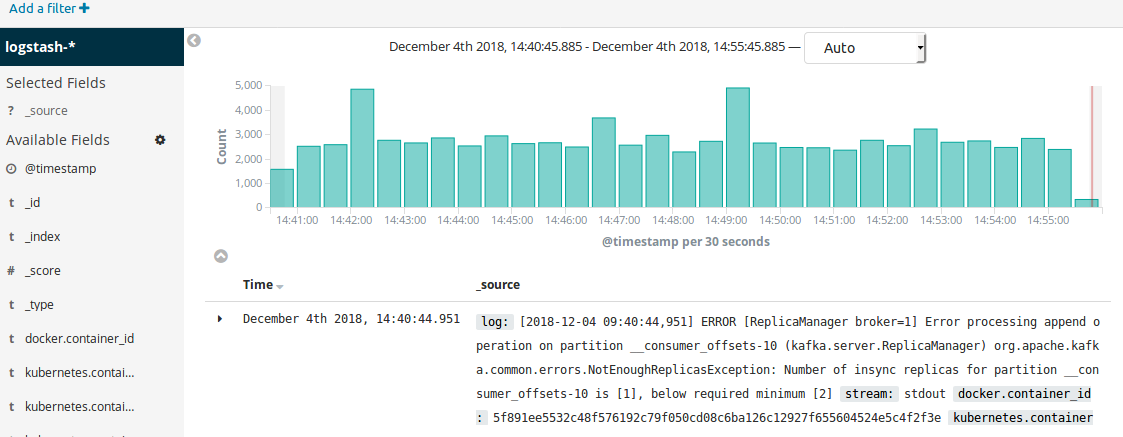

Kibana

Kibana is a visualization tool which helps us view queries on Elasticsearch. We can view the text log data as-is, filter and query it based on certain labels to view just a subset, and also view it in the form of a chart, graph or other visualization. Kibana comes with some visualizations out of the box, and we can build custom visualizations and dashboards as per our requirements. Visualizations can help indicate trends, such as the number of warning or error logs throughout the day, which can be correlated with changing server load or other factors. Such analyses can then help identify issues proactively.

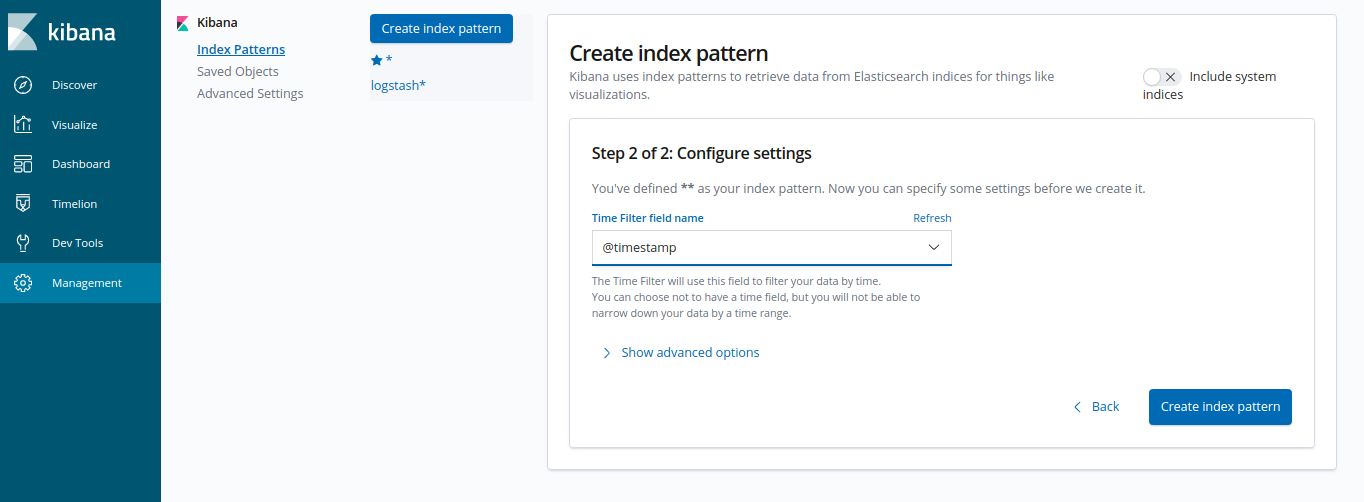

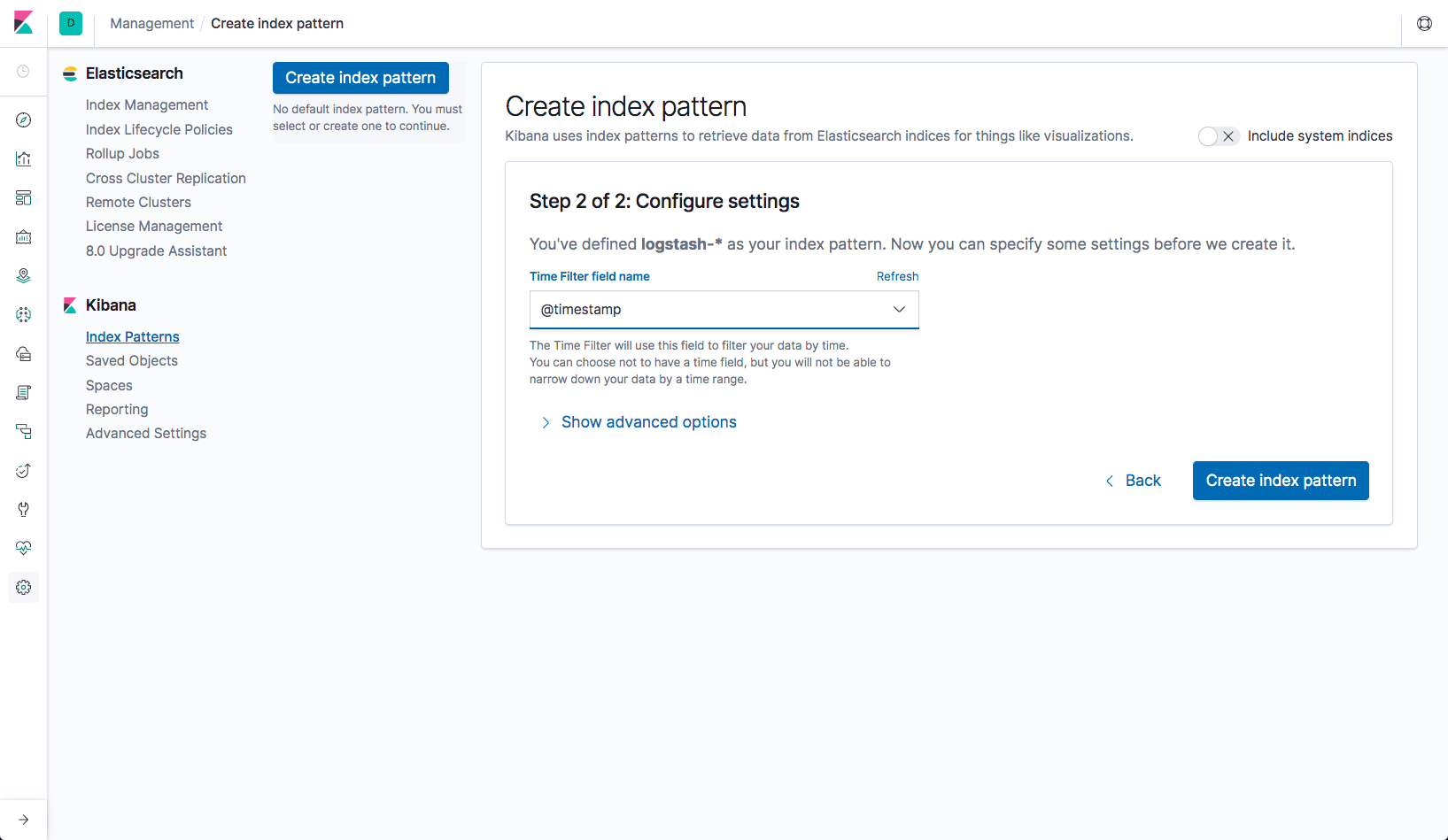

- Configuring Kibana

At first start, before visualizing logs, Kibana needs to be configured with an index pattern for elasticsearch indices.

At first startup Kibana will automatically redirect you to index pattern creation. Create an index pattern from Management -> Index Patterns -> Create Index Pattern:

- Create the index pattern and name it *

- Click "Next" and set @timestamp as the Time filter field name

Once the index pattern is created, Kibana will take a few seconds to fetch data from ES, after which you'll start seeing logs in Kibana.

Tools Matrix

| Tool | Chart Repository | Cherry Pickable | SSO | Pre-Requisites |

|---|---|---|---|---|

| Elasticsearch | Public | Yes | N/A | None |

| Fluentd | Public | No | N/A | Elasticsearch |

| Eventrouter | Public | No | N/A | None |

| Kibana | Public | No | Yes | KeyCloak, Elasticsearch |

| Cerebro | Public | No | Yes | KeyCloak, Elasticsearch |

| Elasticsearch Curator | Public | No | N/A | Elasticsearch |

| Logrotate | Stakater | Yes | N/A | None |

| Konfigurator | Stakater | No | N/A | Fluentd |

Storage Details

| Tool | PVC | Recommended Space |
|:---:|:---:|:---:|
| Elasticsearch | PVC details | - |
| - | elasticsearch-master-elasticsearch-master-0 | 8Gi |
| - | elasticsearch-data-elasticsearch-data-0 | 20Gi |
| - | elasticsearch-data-elasticsearch-data-1 | 20Gi |

Details: Our recommended configuration for elasticsearch, used in StakaterPlatform, is to have 1 master node, 1 client node and 2 data nodes. Persistence should be enabled for master and data nodes.

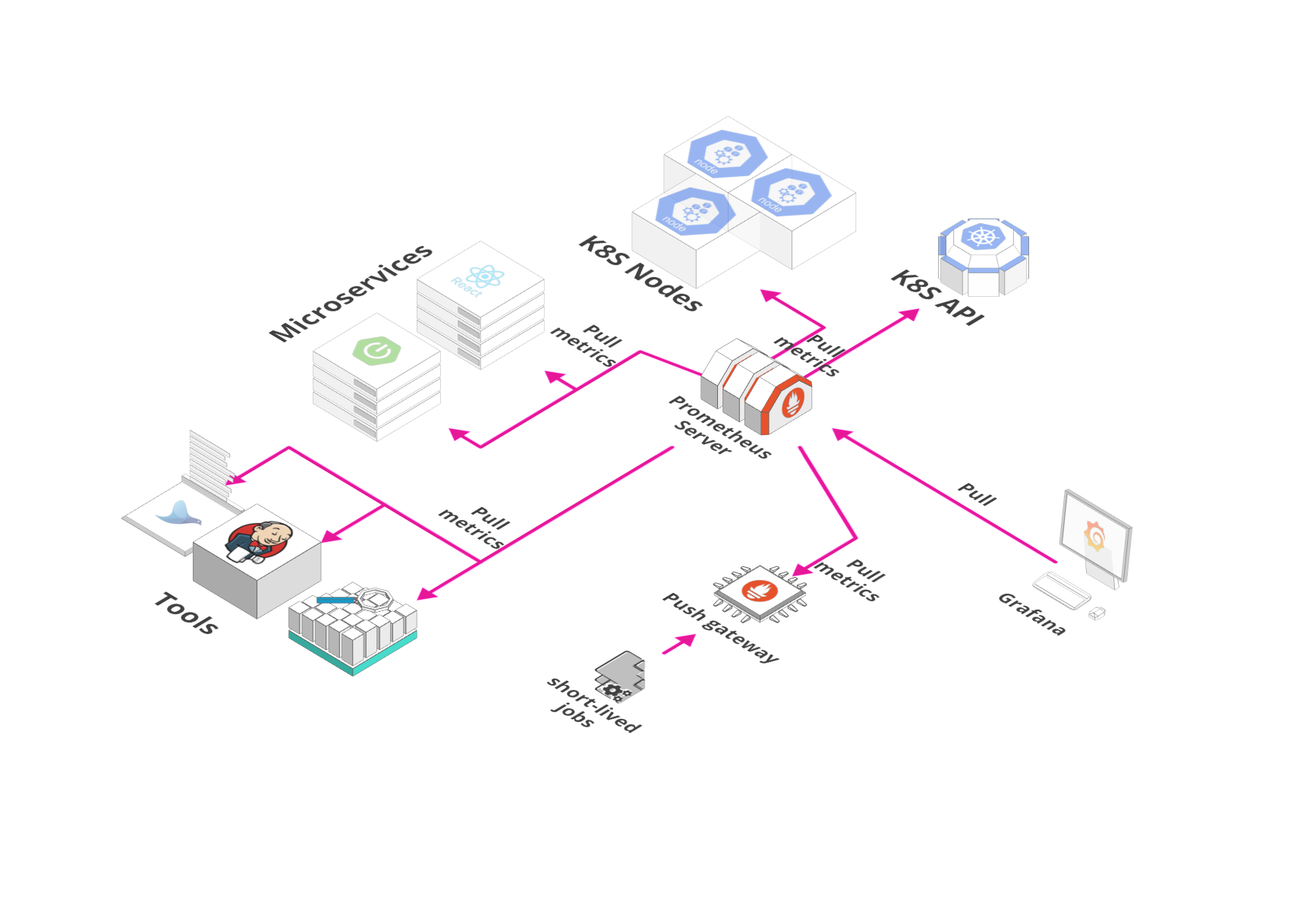

Monitoring Stack

Overview

Monitoring Stack includes tools required for the monitoring of applications and infrastructure.

We are using Prometheus Operator helm chart to deploy following monitoring components:

Prometheus: It is an opensource monitoring solution for metrics and alerting.

Service Monitors: CRD to generate prometheus configuration to monitor Kubernetes services.

AlertManager: It is part of Prometheus's alerting feature. Alert rules are defined in Prometheus server that send alerts to Alertmanager. Once alerts are received Alertmanager can group, inhibit or silence them.

Node Exporter: Prometheus exporter for hardware and OS metrics exposed by *NIX kernels, with pluggable metric collectors.

Kube State Metrics: It is a service that generates metrics about Kubernetes objects by using the Kubernetes API server.

Grafana: Grafana is an open source metric analytics & visualization suite with support for Elasticsearch, Prometheus etc. Currently, it is being used with Prometheus.

Details

- PrometheusOperator in Openshift

Prometheus operator can be deployed in openshift using Openshift subscription. Create a file named prometheus-subscription.yaml with following content

apiVersion: operators.coreos.com/v1alpha1

kind: Subscription

metadata:

generateName: prometheus-

namespace: {NAMESPACE}

spec:

source: rh-operators

name: prometheus

startingCSV: prometheusoperator.0.22.2

channel: preview

Replace {NAMESPACE} with the name of namespace in which you want prometheus operator to be deployed.
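The placeholder substitution can be sketched in shell. This is a minimal illustration, not a definitive procedure: the namespace value and the temporary directory are assumptions. Because the manifest sets generateName rather than a fixed name, it has to be submitted with a create operation; apply requires metadata.name. The cluster call is left as a comment so the sketch runs without a cluster.

```shell
# Sketch: render prometheus-subscription.yaml for one namespace.
# The NAMESPACE value and the temporary directory are assumptions for illustration.
NAMESPACE="monitoring"
WORKDIR="$(mktemp -d)"

# Same manifest as above, written with its YAML indentation.
cat > "$WORKDIR/prometheus-subscription.yaml" <<'EOF'
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  generateName: prometheus-
  namespace: {NAMESPACE}
spec:
  source: rh-operators
  name: prometheus
  startingCSV: prometheusoperator.0.22.2
  channel: preview
EOF

# Substitute the placeholder; '{' and '}' are literal in basic sed regexes.
RENDERED="$(sed "s/{NAMESPACE}/${NAMESPACE}/g" "$WORKDIR/prometheus-subscription.yaml")"
printf '%s\n' "$RENDERED"

# The manifest sets generateName instead of name, so submit it with
# "create" ("apply" needs a fixed metadata.name):
#   printf '%s\n' "$RENDERED" | oc create -f -
```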

Currently OKD doesn't support a prometheus operator that watches more than one namespace. If needed, you can create a custom CSV of prometheus operator that uses the latest version of the prometheus operator image and specifies multiple namespaces to watch.

To get the current CSV, create a subscription for the prometheus operator and then go to k8s/all-namespaces/clusterserviceversions in the browser and select the subscription you created and copy its yaml file. Make the following changes to the CSV:

- Change version

- Remove fields like selflink, uuid etc.

- Update image of prometheus operator, minimum v0.29.0.

- Search the block that creates the prometheus operator container, update the namespace arg and change it to '-namespaces=$(K8S_NAMESPACE),SECOND_NAMESPACE' (change SECOND_NAMESPACE to the name of the extra namespace that you want it to watch). You can add multiple namespaces as well by separating their names with a comma (,).

After changes your CSV should look something like this

apiVersion: operators.coreos.com/v1alpha1

kind: ClusterServiceVersion

metadata:

annotations:

alm-examples: >-

[{"apiVersion":"monitoring.coreos.com/v1","kind":"Prometheus","metadata":{"name":"example","labels":{"prometheus":"k8s"}},"spec":{"replicas":2,"version":"v2.3.2","serviceAccountName":"prometheus-k8s","securityContext":

{},

"serviceMonitorSelector":{"matchExpressions":[{"key":"k8s-app","operator":"Exists"}]},"ruleSelector":{"matchLabels":{"role":"prometheus-rulefiles","prometheus":"k8s"}},"alerting":{"alertmanagers":[{"namespace":"monitoring","name":"alertmanager-main","port":"web"}]}}},{"apiVersion":"monitoring.coreos.com/v1","kind":"ServiceMonitor","metadata":{"name":"example","labels":{"k8s-app":"prometheus"}},"spec":{"selector":{"matchLabels":{"k8s-app":"prometheus"}},"endpoints":[{"port":"web","interval":"30s"}]}},{"apiVersion":"monitoring.coreos.com/v1","kind":"Alertmanager","metadata":{"name":"alertmanager-main"},"spec":{"replicas":3,

"securityContext": {}}}]

labels:

alm-catalog: rh-operators

name: prometheusoperator.0.29.0

namespace: {NAMESPACE}

spec:

apiservicedefinitions: {}

customresourcedefinitions:

owned:

- description: A running Prometheus instance

displayName: Prometheus

kind: Prometheus

name: prometheuses.monitoring.coreos.com

resources:

- kind: StatefulSet

name: ''

version: v1beta2

- kind: Pod

name: ''

version: v1

specDescriptors:

- description: Desired number of Pods for the cluster

displayName: Size

path: replicas

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:podCount'

- description: A selector for the ConfigMaps from which to load rule files

displayName: Rule Config Map Selector

path: ruleSelector

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:selector:core:v1:ConfigMap'

- description: ServiceMonitors to be selected for target discovery

displayName: Service Monitor Selector

path: serviceMonitorSelector

x-descriptors:

- >-

urn:alm:descriptor:com.tectonic.ui:selector:monitoring.coreos.com:v1:ServiceMonitor

- description: The ServiceAccount to use to run the Prometheus pods

displayName: Service Account

path: serviceAccountName

x-descriptors:

- 'urn:alm:descriptor:io.kubernetes:ServiceAccount'

- description: >-

Limits describes the minimum/maximum amount of compute resources

required/allowed

displayName: Resource Requirements

path: resources

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:resourceRequirements'

version: v1

- description: >-

A Prometheus Rule configures groups of sequentially evaluated

recording and alerting rules.

displayName: Prometheus Rule

kind: PrometheusRule

name: prometheusrules.monitoring.coreos.com

version: v1

- description: Configures prometheus to monitor a particular k8s service

displayName: Service Monitor

kind: ServiceMonitor

name: servicemonitors.monitoring.coreos.com

resources:

- kind: Pod

name: ''

version: v1

specDescriptors:

- description: The label to use to retrieve the job name from

displayName: Job Label

path: jobLabel

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:label'

- description: A list of endpoints allowed as part of this ServiceMonitor

displayName: Endpoints

path: endpoints

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:endpointList'

version: v1

- description: Configures an Alertmanager for the namespace

displayName: Alertmanager

kind: Alertmanager

name: alertmanagers.monitoring.coreos.com

resources:

- kind: StatefulSet

name: ''

version: v1beta2

- kind: Pod

name: ''

version: v1

specDescriptors:

- description: Desired number of Pods for the cluster

displayName: Size

path: replicas

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:podCount'

- description: >-

Limits describes the minimum/maximum amount of compute resources

required/allowed

displayName: Resource Requirements

path: resources

x-descriptors:

- 'urn:alm:descriptor:com.tectonic.ui:resourceRequirements'

version: v1

description: >

The Prometheus Operator for Kubernetes provides easy monitoring definitions

for Kubernetes services and deployment and management of Prometheus

instances.

Once installed, the Prometheus Operator provides the following features:

* **Create/Destroy**: Easily launch a Prometheus instance for your

Kubernetes namespace, a specific application or team easily using the

Operator.

* **Simple Configuration**: Configure the fundamentals of Prometheus like

versions, persistence, retention policies, and replicas from a native

Kubernetes resource.

* **Target Services via Labels**: Automatically generate monitoring target

configurations based on familiar Kubernetes label queries; no need to learn

a Prometheus specific configuration language.

### Other Supported Features

**High availability**

Multiple instances are run across failure zones and data is replicated. This

keeps your monitoring available during an outage, when you need it most.

**Updates via automated operations**

New Prometheus versions are deployed using a rolling update with no

downtime, making it easy to stay up to date.

**Handles the dynamic nature of containers**

Alerting rules are attached to groups of containers instead of individual

instances, which is ideal for the highly dynamic nature of container

deployment.

displayName: Prometheus Operator

icon:

- base64data: >-

PHN2ZyB3aWR0aD0iMjQ5MCIgaGVpZ2h0PSIyNTAwIiB2aWV3Qm94PSIwIDAgMjU2IDI1NyIgeG1sbnM9Imh0dHA6Ly93d3cudzMub3JnLzIwMDAvc3ZnIiBwcmVzZXJ2ZUFzcGVjdFJhdGlvPSJ4TWlkWU1pZCI+PHBhdGggZD0iTTEyOC4wMDEuNjY3QzU3LjMxMS42NjcgMCA1Ny45NzEgMCAxMjguNjY0YzAgNzAuNjkgNTcuMzExIDEyNy45OTggMTI4LjAwMSAxMjcuOTk4UzI1NiAxOTkuMzU0IDI1NiAxMjguNjY0QzI1NiA1Ny45NyAxOTguNjg5LjY2NyAxMjguMDAxLjY2N3ptMCAyMzkuNTZjLTIwLjExMiAwLTM2LjQxOS0xMy40MzUtMzYuNDE5LTMwLjAwNGg3Mi44MzhjMCAxNi41NjYtMTYuMzA2IDMwLjAwNC0zNi40MTkgMzAuMDA0em02MC4xNTMtMzkuOTRINjcuODQyVjE3OC40N2gxMjAuMzE0djIxLjgxNmgtLjAwMnptLS40MzItMzMuMDQ1SDY4LjE4NWMtLjM5OC0uNDU4LS44MDQtLjkxLTEuMTg4LTEuMzc1LTEyLjMxNS0xNC45NTQtMTUuMjE2LTIyLjc2LTE4LjAzMi0zMC43MTYtLjA0OC0uMjYyIDE0LjkzMyAzLjA2IDI1LjU1NiA1LjQ1IDAgMCA1LjQ2NiAxLjI2NSAxMy40NTggMi43MjItNy42NzMtOC45OTQtMTIuMjMtMjAuNDI4LTEyLjIzLTMyLjExNiAwLTI1LjY1OCAxOS42OC00OC4wNzkgMTIuNTgtNjYuMjAxIDYuOTEuNTYyIDE0LjMgMTQuNTgzIDE0LjggMzYuNTA1IDcuMzQ2LTEwLjE1MiAxMC40Mi0yOC42OSAxMC40Mi00MC4wNTYgMC0xMS43NjkgNy43NTUtMjUuNDQgMTUuNTEyLTI1LjkwNy02LjkxNSAxMS4zOTYgMS43OSAyMS4xNjUgOS41MyA0NS40IDIuOTAyIDkuMTAzIDIuNTMyIDI0LjQyMyA0Ljc3MiAzNC4xMzguNzQ0LTIwLjE3OCA0LjIxMy00OS42MiAxNy4wMTQtNTkuNzg0LTUuNjQ3IDEyLjguODM2IDI4LjgxOCA1LjI3IDM2LjUxOCA3LjE1NCAxMi40MjQgMTEuNDkgMjEuODM2IDExLjQ5IDM5LjYzOCAwIDExLjkzNi00LjQwNyAyMy4xNzMtMTEuODQgMzEuOTU4IDguNDUyLTEuNTg2IDE0LjI4OS0zLjAxNiAxNC4yODktMy4wMTZsMjcuNDUtNS4zNTVjLjAwMi0uMDAyLTMuOTg3IDE2LjQwMS0xOS4zMTQgMzIuMTk3eiIgZmlsbD0iI0RBNEUzMSIvPjwvc3ZnPg==

mediatype: image/svg+xml

install:

spec:

deployments:

- name: prometheus-operator

spec:

replicas: 1

selector:

matchLabels:

k8s-app: prometheus-operator

template:

metadata:

labels:

k8s-app: prometheus-operator

spec:

containers:

- args:

- '-namespaces=$(K8S_NAMESPACE),{TARGET_NAMESPACE}'

- '-manage-crds=false'

- '-logtostderr=true'

- >-

--config-reloader-image=quay.io/coreos/configmap-reload:v0.0.1

- >-

--prometheus-config-reloader=quay.io/coreos/prometheus-config-reloader:v0.29.0

env:

- name: K8S_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: >-

quay.io/coreos/prometheus-operator@sha256:5abe9bdfd93ac22954e3281315637d9721d66539134e1c7ed4e97f13819e62f7

name: prometheus-operator

ports:

- containerPort: 8080

name: http

resources:

limits:

cpu: 200m

memory: 100Mi

requests:

cpu: 100m

memory: 50Mi

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

nodeSelector:

beta.kubernetes.io/os: linux

serviceAccount: prometheus-operator-0-29-0

permissions:

- rules:

- apiGroups:

- ''

resources:

- nodes

- services

- endpoints

- pods

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

verbs:

- get

serviceAccountName: prometheus-k8s

- rules:

- apiGroups:

- apiextensions.k8s.io

resources:

- customresourcedefinitions

verbs:

- '*'

- apiGroups:

- monitoring.coreos.com

resources:

- alertmanagers

- prometheuses

- prometheuses/finalizers

- alertmanagers/finalizers

- servicemonitors

- prometheusrules

verbs:

- '*'

- apiGroups:

- apps

resources:

- statefulsets

verbs:

- '*'

- apiGroups:

- ''

resources:

- configmaps

- secrets

verbs:

- '*'

- apiGroups:

- ''

resources:

- pods

verbs:

- list

- delete

- apiGroups:

- ''

resources:

- services

- endpoints

verbs:

- get

- create

- update

- apiGroups:

- ''

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- namespaces

verbs:

- list

- get

- watch

serviceAccountName: prometheus-operator-0-29-0

strategy: deployment

keywords:

- prometheus

- monitoring

- tsdb

- alerting

labels:

alm-owner-prometheus: prometheusoperator

alm-status-descriptors: prometheusoperator.0.29.0

links:

- name: Prometheus

url: 'https://www.prometheus.io/'

- name: Documentation

url: 'https://coreos.com/operators/prometheus/docs/latest/'

- name: Prometheus Operator

url: 'https://github.com/coreos/prometheus-operator'

maintainers:

- email: openshift-operators@redhat.com

name: Red Hat

maturity: beta

provider:

name: Red Hat

replaces: prometheusoperator.0.22.2

selector:

matchLabels:

alm-owner-prometheus: prometheusoperator

version: 0.29.0

Now you can create this new CSV with oc apply and use it by referencing it in the startingCSV field of the subscription.

Also for each extra namespace added, you must give access to prometheus operator's service account in the extra namespace. The permissions needed are below

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: prometheus-{EXTRA_NAMESPACE}

namespace: {EXTRA_NAMESPACE}

rules:

- apiGroups:

- ''

resources:

- nodes

- services

- endpoints

- pods

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: prometheus-binding-{EXTRA_NAMESPACE}

namespace: {EXTRA_NAMESPACE}

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: prometheus-{EXTRA_NAMESPACE}

subjects:

- kind: ServiceAccount

name: prometheus-k8s

namespace: {NAMESPACE}

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: prometheus-operator-{EXTRA_NAMESPACE}

namespace: {EXTRA_NAMESPACE}

rules:

- apiGroups:

- apiextensions.k8s.io

resources:

- customresourcedefinitions

verbs:

- '*'

- apiGroups:

- monitoring.coreos.com

resources:

- alertmanagers

- prometheuses

- prometheuses/finalizers

- alertmanagers/finalizers

- servicemonitors

- prometheusrules

verbs:

- '*'

- apiGroups:

- apps

resources:

- statefulsets

verbs:

- '*'

- apiGroups:

- ''

resources:

- configmaps

- secrets

verbs:

- '*'

- apiGroups:

- ''

resources:

- pods

verbs:

- list

- delete

- apiGroups:

- ''

resources:

- services

- endpoints

verbs:

- get

- create

- update

- apiGroups:

- ''

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- namespaces

verbs:

- list

- get

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: prometheus-operator-binding-{EXTRA_NAMESPACE}

namespace: {EXTRA_NAMESPACE}

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: prometheus-operator-{EXTRA_NAMESPACE}

subjects:

- kind: ServiceAccount

name: prometheus-operator-0-29-0

namespace: {NAMESPACE}

Replace {NAMESPACE} and {EXTRA_NAMESPACE} with appropriate values. In case of multiple namespaces, create one copy of above manifest for each extra namespace. The name of the service account should match the name provided in CSV above.
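Creating one copy per extra namespace can be sketched as a small loop. This is only an illustration under assumptions: the template file name, the namespace names team-a and team-b, and the shortened stand-in template (a single RoleBinding instead of the full manifest) are all hypothetical.

```shell
# Sketch: render one copy of the RBAC manifest per extra namespace.
# File names, the namespace list, and the shortened template are assumptions.
NAMESPACE="monitoring"              # namespace of the Prometheus operator
EXTRA_NAMESPACES="team-a team-b"    # hypothetical namespaces to watch
WORKDIR="$(mktemp -d)"

# Stand-in for the full manifest above (only one RoleBinding, for brevity).
cat > "$WORKDIR/prometheus-rbac.tmpl.yaml" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-binding-{EXTRA_NAMESPACE}
  namespace: {EXTRA_NAMESPACE}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-{EXTRA_NAMESPACE}
subjects:
- kind: ServiceAccount
  name: prometheus-k8s
  namespace: {NAMESPACE}
EOF

for ns in $EXTRA_NAMESPACES; do
  # Replace both placeholders and write one rendered file per namespace.
  sed -e "s/{EXTRA_NAMESPACE}/${ns}/g" -e "s/{NAMESPACE}/${NAMESPACE}/g" \
    "$WORKDIR/prometheus-rbac.tmpl.yaml" > "$WORKDIR/prometheus-rbac-${ns}.yaml"
  echo "rendered $WORKDIR/prometheus-rbac-${ns}.yaml"
  # To submit: oc apply -f "$WORKDIR/prometheus-rbac-${ns}.yaml"
done
```

The two placeholders do not interfere with each other, because {NAMESPACE} only matches where the opening brace directly precedes NAMESPACE.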

- Prometheus in Openshift

To create prometheus in openshift, create a file named prometheus.yaml with the following content

apiVersion: monitoring.coreos.com/v1

kind: Prometheus

metadata:

labels:

prometheus: k8s

name: prometheus-dev

namespace: {NAMESPACE}

spec:

alerting:

alertmanagers:

- name: alertmanager-operated

namespace: {NAMESPACE}

port: web

replicas: 1

ruleSelector:

matchLabels:

prometheus: k8s

role: prometheus-rulefiles

securityContext: {}

serviceAccountName: prometheus-k8s

serviceMonitorNamespaceSelector:

any: true

serviceMonitorSelector:

matchLabels:

app: "common-service"

version: v2.3.2

---

apiVersion: route.openshift.io/v1

kind: Route

metadata:

name: prometheus-operated

namespace: {NAMESPACE}

spec:

port:

targetPort: web

tls:

insecureEdgeTerminationPolicy: Redirect

termination: edge

to:

kind: Service

name: prometheus-operated

weight: 100

wildcardPolicy: None

Replace {NAMESPACE} with the name of namespace in which you want prometheus to be deployed. It will try to connect with alert manager service alertmanager-operated on port web.

- AlertManager in Openshift

To create alert manager in openshift:

Create a file named alertManagerConfig.tmpl.yaml with the following content

global:
  resolve_timeout: 5m
route:
  group_by:
  - alertname
  - cluster
  - service
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: alerts-null
  routes:
  - match:
      alertname: WatchDog
    receiver: alerts-null
  - receiver: alerts
inhibit_rules:
- source_match:
    severity: critical
  target_match:
    severity: warning
  equal:
  - alertname
  - cluster
  - service
receivers:
- name: alerts
  slack_configs:
  - api_url: "{SLACK_WEBHOOK_URL}"
    channel: "#alerts"
    title: '[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] Prometheus Event Notification'
    title_link: |2
      https://alertmanager-operated-{NAMESPACE}.kubehealth.com
    text: |2-
      {{ range .Alerts }}
      *Alert:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`
      *Description:* {{ .Annotations.description }}
      *Graph:* <{{ .GeneratorURL }}|:chart_with_upwards_trend:> *Runbook:* <{{ .Annotations.runbook }}|:spiral_note_pad:>
      *Details:*
      {{ range .Labels.SortedPairs }} *{{ .Name }}:* `{{ .Value }}`
      {{ end }}
      {{ end }}
    send_resolved: true
- name: alerts-null

Feel free to modify the config to suit your needs. This sample config sends all alerts except WatchDog to Slack via webhook. Replace {SLACK_WEBHOOK_URL} with the link of your webhook.

Next, create a file named alertManager.tmpl.yaml with the following content

apiVersion: monitoring.coreos.com/v1
kind: Alertmanager
metadata:
  name: alertmanager-dev
  namespace: {NAMESPACE}
spec:
  replicas: 1
  securityContext: {}
---
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  labels:
    k8s-app: alertmanager
  name: alertmanager-alertmanager-dev
  namespace: {NAMESPACE}
data:
  alertmanager.yaml: >-
    {CONFIG_BASE64}
---
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: alertmanager-operated
  namespace: {NAMESPACE}
spec:
  port:
    targetPort: web
  tls:
    insecureEdgeTerminationPolicy: Redirect
    termination: edge
  to:
    kind: Service
    name: alertmanager-operated
    weight: 100
  wildcardPolicy: None

Now execute

NAMESPACE="monitoring"
REPLACE_NAMESPACE="s/{NAMESPACE}/$NAMESPACE/g"
REPLACE_CONFIG="s|{CONFIG_BASE64}|$(sed "$REPLACE_NAMESPACE" alertManagerConfig.tmpl.yaml | base64 -w 0)|g"
sed "${REPLACE_NAMESPACE}" alertManager.tmpl.yaml | sed "${REPLACE_CONFIG}" | oc apply --namespace "${NAMESPACE}" -f -
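Before applying anything to the cluster, the templating step can be tried as a dry run. The sketch below is an assumption-laden illustration: it uses shortened stand-in templates in a temporary directory, GNU base64 (for -w 0 and -d), and no oc call. Base64 output may contain /, so the sketch uses | as the sed delimiter for the encoded value, then decodes the embedded value again to confirm it survived the substitution.

```shell
# Sketch: dry run of the templating step, without calling oc.
# The two stand-in templates below are shortened assumptions, not the full files.
NAMESPACE="monitoring"
WORKDIR="$(mktemp -d)"

cat > "$WORKDIR/alertManagerConfig.tmpl.yaml" <<'EOF'
global:
  resolve_timeout: 5m
receivers:
- name: alerts
  slack_configs:
  - title_link: https://alertmanager-operated-{NAMESPACE}.kubehealth.com
EOF

cat > "$WORKDIR/alertManager.tmpl.yaml" <<'EOF'
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: alertmanager-alertmanager-dev
  namespace: {NAMESPACE}
data:
  alertmanager.yaml: >-
    {CONFIG_BASE64}
EOF

# Encode the namespace-substituted config on a single line (GNU base64).
CONFIG_BASE64="$(sed "s/{NAMESPACE}/${NAMESPACE}/g" "$WORKDIR/alertManagerConfig.tmpl.yaml" | base64 -w 0)"

# Use '|' as the sed delimiter: it never occurs in base64 output, unlike '/'.
RENDERED="$(sed -e "s/{NAMESPACE}/${NAMESPACE}/g" \
                -e "s|{CONFIG_BASE64}|${CONFIG_BASE64}|g" "$WORKDIR/alertManager.tmpl.yaml")"

# Take the line after "alertmanager.yaml:" and decode it again.
DECODED="$(printf '%s\n' "$RENDERED" \
  | awk 'f { print $1; exit } /alertmanager.yaml:/ { f = 1 }' \
  | base64 -d)"
printf '%s\n' "$DECODED"
```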

- Grafana in Openshift

To deploy grafana in openshift using helm, put the following grafana values in a file, e.g. grafana-values.yaml

sidecar:

dashboards:

enabled: true

adminPassword: "adminPassword"

rbac:

create: true

pspEnabled: false

# it will

namespaced: true

securityContext:

runAsUser: 1000260001

fsGroup:

runAsNonRoot: true

datasources:

datasources.yaml:

apiVersion: 1

datasources:

- name: Prometheus

type: prometheus

url: http://prometheus-operated:9090

access: proxy

isDefault: true

After replacing the prometheus URL and your custom password in grafana-values.yaml, run

RELEASE="grafana"

NAMESPACE="monitoring"

helm upgrade --install $RELEASE --namespace $NAMESPACE stable/grafana -f grafana-values.yaml --force

Helm chart cannot create the route at the moment so if needed you can create it by saving the following manifest in a file named route.yaml.

apiVersion: route.openshift.io/v1

kind: Route

metadata:

labels:

app: grafana

chart: grafana-3.3.8

heritage: Tiller

name: grafana

namespace: {NAMESPACE}

spec:

port:

targetPort: service

tls:

insecureEdgeTerminationPolicy: Redirect

termination: edge

to:

kind: Service

name: {GRAFANA_SERVICE}

weight: 100

wildcardPolicy: None

Replace {NAMESPACE} and {GRAFANA_SERVICE} (the name of grafana helm release) with correct values and then run

oc apply -f route.yaml

- Monitoring in Business Application

Updating business app

Spring

Follow the steps below to expose monitoring metrics of a spring boot application at /actuator/prometheus.

Add Dependencies

The following dependencies are needed to expose micrometer and application metrics

<dependencies>

<!-- For micrometer support -->

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-core</artifactId>

<version>1.1.4</version>

</dependency>

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

<version>1.1.4</version>

</dependency>

</dependencies>

Update Configuration

Add the following properties to application.properties to expose the micrometer endpoint.

management.endpoint.metrics.enabled=true

management.endpoints.web.exposure.include=*

management.endpoint.prometheus.enabled=true

management.metrics.export.prometheus.enabled=true

Add micrometer registry

Add the MeterRegistry bean to your spring boot application by adding the following snippet to your SpringBootApplication class.

@Bean

MeterRegistryCustomizer<MeterRegistry> metricsCommonTags() {

return registry -> registry.config().commonTags("application", "common-service");

}

This will help you create custom metrics within the application

Use Counter

To count the number of times an operation has been performed, create an io.micrometer.core.instrument.Counter variable by doing

Counter.builder("count_metric_name").description("Description of metric").register(meterRegistry);

The Counter class exposes a simple increment method that can be used to count.

Time Measurement

To add a metric that keeps track of the processing time taken by a piece of code, use the following snippet:

private final Timer timer = Timer.builder("metricsname").tag("tagKey", "tagValue").register(meterRegistry);

long start = System.nanoTime();

// ... your code here

timer.record(System.nanoTime() - start, TimeUnit.NANOSECONDS);
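Once the application is running, the metrics can be checked at /actuator/prometheus. Micrometer's Prometheus registry exports counters with a _total suffix and timers as _seconds_count and _seconds_sum series. The sketch below is only an illustration: metric_value is a hypothetical helper, and the sample text and its values are made up to show the output format rather than captured from a real application.

```shell
# Sketch: read one metric value from Prometheus text exposition output.
# metric_value is a hypothetical helper; SAMPLE is illustrative, not real output.

# Print the value of the first sample whose metric name equals $1.
metric_value() {
  awk -v name="$1" '
    /^#/ { next }                          # skip HELP/TYPE comment lines
    {
      i = index($1, "{")                   # the label set starts at "{"
      metric = (i > 0) ? substr($1, 1, i - 1) : $1
      if (metric == name) { print $NF; exit }
    }'
}

SAMPLE='# HELP count_metric_name_total Description of metric
# TYPE count_metric_name_total counter
count_metric_name_total{application="common-service",} 3.0
metricsname_seconds_count{application="common-service",tagKey="tagValue",} 2.0
metricsname_seconds_sum{application="common-service",tagKey="tagValue",} 0.5'

COUNT="$(printf '%s\n' "$SAMPLE" | metric_value count_metric_name_total)"
printf 'count_metric_name_total = %s\n' "$COUNT"

# Against a running app (host and port are assumptions):
#   curl -s http://localhost:8080/actuator/prometheus | metric_value count_metric_name_total
```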

Adding Service Monitor

You need to add a service monitor so that prometheus knows where your app is running and where it should scrape metrics from. To create a service monitor, create a file named service-monitor.yaml with the following content:

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

app: {APP_NAME}-monitor

name: {APP_NAME}-monitor

namespace: {NAMESPACE}

spec:

endpoints:

- interval: 30s

path: /actuator/prometheus

port: web

namespaceSelector:

matchNames:

- "{TARGET_NAMESPACE}"

selector:

matchLabels:

app: {APP_NAME}

Replace {APP_NAME}, {NAMESPACE} and {TARGET_NAMESPACE} with correct values in the above file. Here {NAMESPACE} is the namespace in which prometheus is running and {TARGET_NAMESPACE} is the namespace in which your app is running. Also, the service in front of your app should have a label app: {APP_NAME}, because the service monitor's selector matches on service labels. If it doesn't have that label, update the selector in the above file according to your scenario.

Apply the above manifest to create service monitor using the command below:

oc apply -f service-monitor.yaml

Adding Prometheus Rule

Prometheus can be configured to trigger alerts based on metrics using Prometheus rule. To create alerts for your app create a file named prometheus-rule.yaml with following content

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

labels:

prometheus: k8s

role: prometheus-rulefiles

name: prometheus-rules-{NAMESPACE}

namespace: {NAMESPACE}

spec:

groups:

- name: application.rules

rules:

- alert: HighEmailUsage

annotations:

message: 'Email usage is greater than 10.'

expr: count_requests_total > 10

for: 10s

labels:

severity: warning

Replace {NAMESPACE} with the name of the namespace in which prometheus is deployed. This sample config generates an alert when your business application metric count_requests_total meets the criteria count_requests_total > 10. This can be changed based on your scenario, and more alerts can be added as new array elements.

- Creating Hooks in Slack

Configure through an App

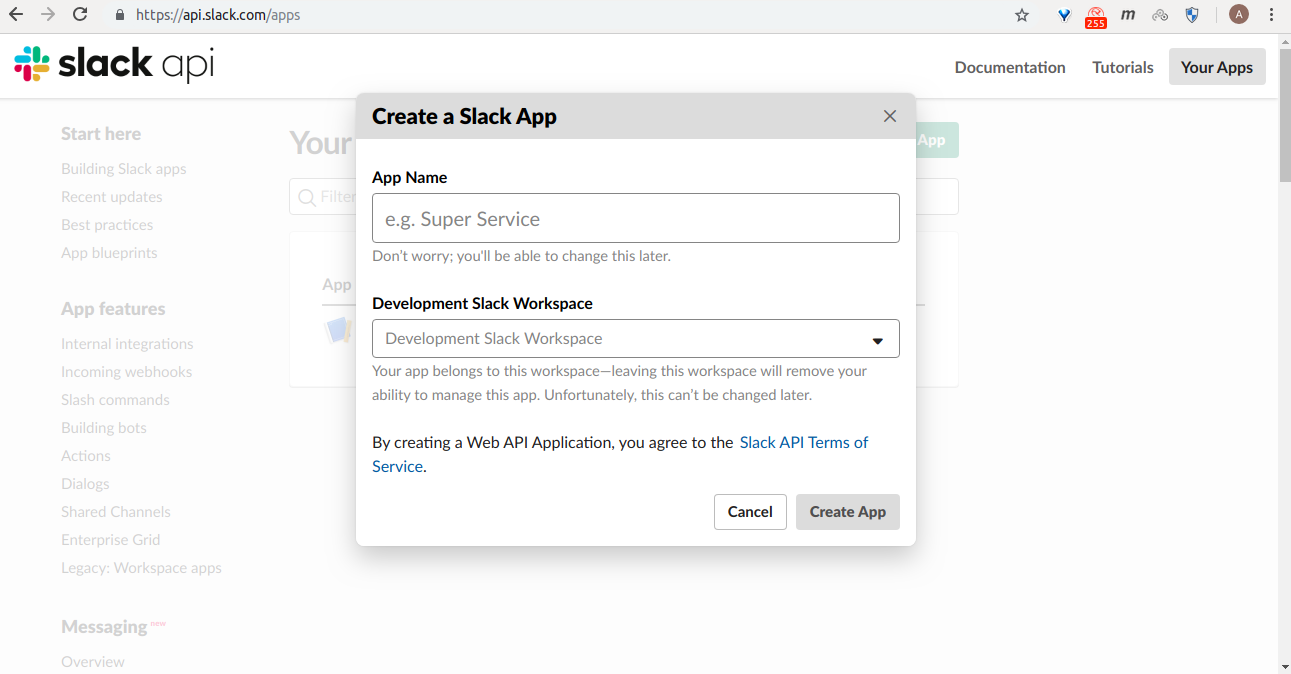

You will have to select an app for creating hooks; if you don't have one, you will have to create one.

If you don't have one already

Go to https://api.slack.com/apps and click create new app.

Pick a name, choose a workspace to install your app to, and then click Create App.

If you already have an app

Go to https://api.slack.com/apps and select the app from the list that you want to configure.

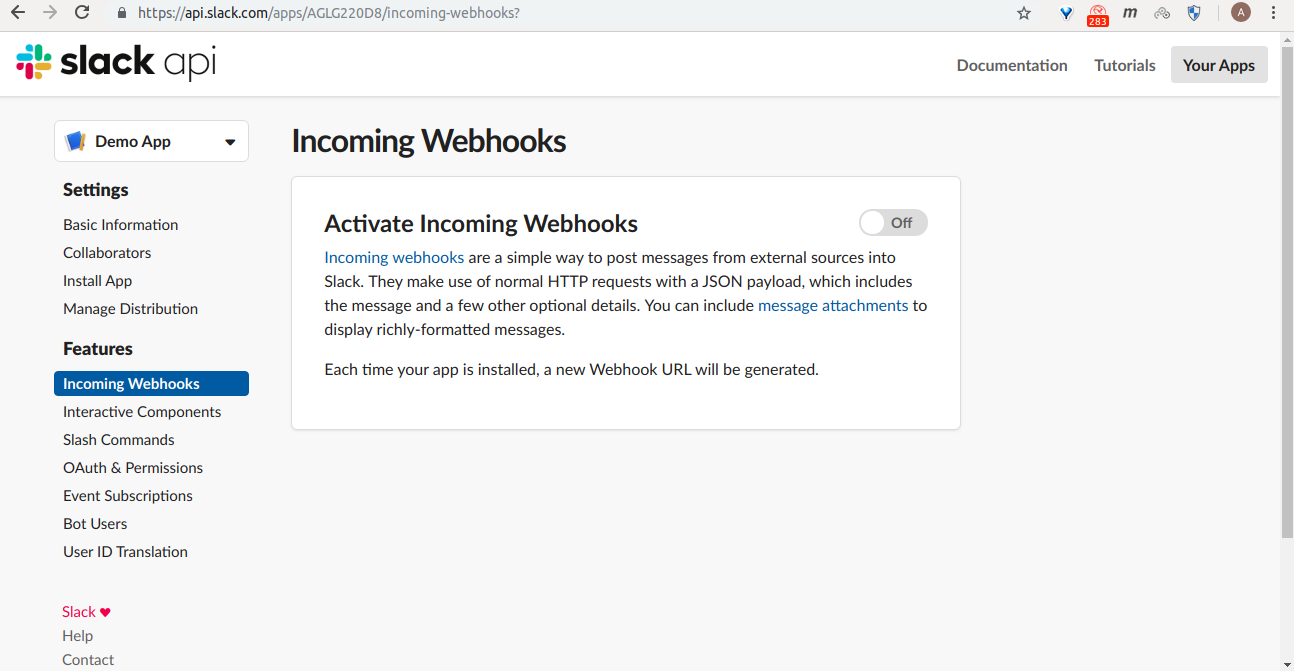

Activate Webhooks

Selecting your app will take you to the Settings of the app; then go to Incoming Webhooks on the left side.

From here, click the Activate Incoming Webhooks toggle to switch it on.

If you want to create a hook for a slack channel and add an app to post in that channel, follow these steps.

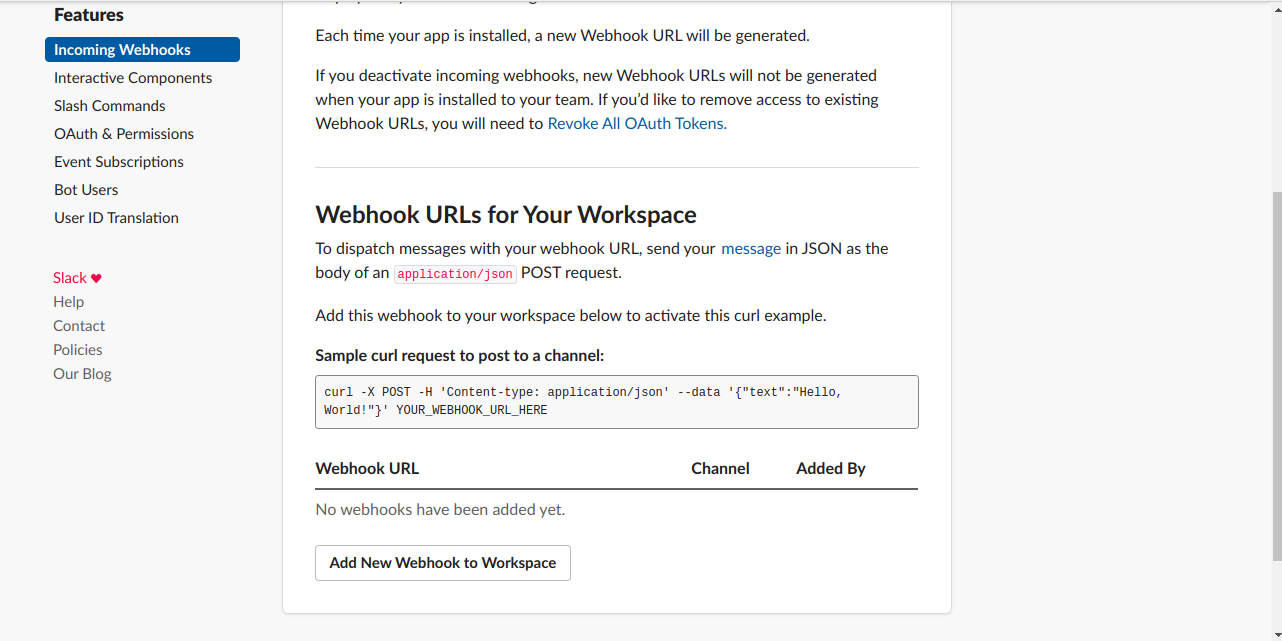

Add Webhook To Workspace

Now that Incoming Webhooks are enabled, the settings page should refresh and some extra options will appear below. One of those options will be a really helpful button marked Add New Webhook to Workspace, and you should click it.

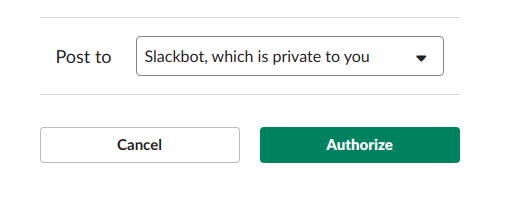

So go ahead and pick a channel that the app will post to, and then click to Authorize your app. You'll be sent back to your app settings, and you should now see a new entry under the Webhook URLs for Your Workspace section. Use this slack hook to post messages to that channel.

- Configure a Custom Integration

Go to https://<your-workspace>.slack.com/apps/manage, go to Custom Integrations. Select the Incoming Webhooks, and Select Add Configuration.

After this, you will be redirected and there will be an option to select a channel or you can even create a new channel from there.

And then click Add Incoming Webhooks Integration.

This will give you the webhook URL.
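A new webhook URL can be checked by posting a test message to it. The sketch below is a minimal illustration: slack_payload is a hypothetical helper, the message text is made up, and SLACK_WEBHOOK_URL is assumed to be set in the environment (nothing is sent if it is not). Slack incoming webhooks accept a JSON body with a text field.

```shell
# Sketch: send a test message to an incoming webhook.
# SLACK_WEBHOOK_URL is expected in the environment; nothing is sent if it is unset.

# Build a JSON body of the form {"text": "..."} from a single-line message.
slack_payload() {
  # Escape backslashes and double quotes so the text is a valid JSON string.
  escaped="$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')"
  printf '{"text": "%s"}' "$escaped"
}

PAYLOAD="$(slack_payload 'Test alert from "monitoring"')"
printf '%s\n' "$PAYLOAD"

if [ -n "${SLACK_WEBHOOK_URL:-}" ]; then
  curl -sS -X POST -H 'Content-type: application/json' \
    --data "$PAYLOAD" "$SLACK_WEBHOOK_URL"
fi
```

The helper only handles single-line messages; text containing newlines or control characters would need a real JSON encoder.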

Tools Matrix

| Tool | Chart Repository | Cherry Pickable | SSO | Pre-Requisites |

|---|---|---|---|---|

| Prometheus Operator | Public | Yes | N/A | None |

| Metrics server | Public | Yes | N/A | None |

Default Configurations

Following are the default configurations that are used by StakaterPlatform for Monitoring stack. These configurations can be found under configs/ directory in the StakaterPlatform Github repository

AlertManager

Alertmanager handles the alerts that Prometheus generates on the basis of prometheus rules. These alerts can be sent to Slack for a better alerting workflow.

Configure the following variables in variables.config to send alerts to Slack channels.

| Variables | Description | Default |

|---|---|---|

| SLACK_INFRA_ALERTS_WEBHOOK_URL | Slack channel webhook URL to send Alertmanager k8s infrastructure alerts | OPTIONAL |

| SLACK_INFRA_ALERTS_CHANNEL | Slack channel name to send Alertmanager k8s infrastructure alerts | #stakater-platform-infra-alerts |

| SLACK_APPS_ALERTS_WEBHOOK_URL | Slack channel webhook URL to send Alertmanager application alerts | OPTIONAL |

| SLACK_APPS_ALERTS_CHANNEL | Slack channel name to send Alertmanager application alerts | #stakater-platform-apps-alerts |

configs/alertmanager.yaml

# For more details: https://github.com/prometheus/alertmanager

global:

resolve_timeout: 5m

inhibit_rules:

- target_match:

alertname: 'CPUThrottlingHigh'

source_match:

alertname: 'Watchdog'

equal: ['prometheus']

receivers:

- name: alerts-null

- name: default-infra-alerts

slack_configs:

- api_url: SLACK_INFRA_ALERTS_WEBHOOK_URL

channel: '#SLACK_INFRA_ALERTS_CHANNEL'

send_resolved: true

text: |2-

{{ range .Alerts }}

*Alert:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`

*Description:* {{ .Annotations.description }}

*Graph:* <{{ .GeneratorURL }}|:chart_with_upwards_trend:> *Runbook:* <{{ .Annotations.runbook }}|:spiral_note_pad:>

*Details:*

{{ range .Labels.SortedPairs }} *{{ .Name }}:* `{{ .Value }}`

{{ end }}

{{ end }}

title: '[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] Prometheus Event Notification'

title_link: |2

https://alertmanager-monitoring.DOMAIN

- name: apps-alerts

slack_configs:

- api_url: SLACK_APPS_ALERTS_WEBHOOK_URL

channel: '#SLACK_APPS_ALERTS_CHANNEL'

send_resolved: true

text: |2-

{{ range .Alerts }}

*Alert:* {{ .Annotations.summary }} - `{{ .Labels.severity }}`

*Description:* {{ .Annotations.description }}

*Graph:* <{{ .GeneratorURL }}|:chart_with_upwards_trend:> *Runbook:* <{{ .Annotations.runbook }}|:spiral_note_pad:>

*Details:*

{{ range .Labels.SortedPairs }} *{{ .Name }}:* `{{ .Value }}`

{{ end }}

{{ end }}

title: '[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing| len }}{{ end }}] Prometheus Event Notification'

title_link: |2

https://alertmanager-monitoring.DOMAIN

route:

group_by:

- alertname

- cluster

- service

group_interval: 5m

group_wait: 30s

repeat_interval: 1h

receiver: default-infra-alerts

routes:

- match:

kind: apps

receiver: apps-alerts

- match:

alertname: Watchdog

receiver: alerts-null

Metrics-server is a cluster level component that uses Summary API to scrape metrics from all nodes & pods served by kubelet. It allows us to query resource metrics using kubectl utility.

Storage Details

| Tool | PVC | Recommended Space |
|:---:|:---:|:---:|
| Prometheus Operator | prometheus-stakater-prometheus-db-prometheus-stakater-prometheus-0 | 6Gi |

Security Stack

Overview

A DevOps team may be accessing multiple applications and tools in a single product environment in support of their DevOps processes, such as a CI/CD server, centralized logging, the Kubernetes dashboard, monitoring software, artifact repositories, admin tools, etc. All of these tools require authentication mechanisms for security purposes, and maintaining and remembering authentication credentials for so many tools can quickly become cumbersome for a user. In the event of lost credentials, recovering them can be a tedious process for both users and admins.

Instead of having individual authentication on various tools, a more effective strategy is to use single sign-on for all tools, i.e. a centralized authentication mechanism that can allow or reject access to a set of tools based on a single set of credentials per user. Additionally some tools may not have authentication built into them at all, and may be reliant on an external authentication server in any case. An external authentication server with single sign-on capability can therefore prove to be the way to go in such a situation.

- Single Sign-On

Single Sign-On (SSO) allows users to log in using a single set of credentials, e.g. username and password, so they can easily access a set of applications. SSO saves time and energy for users because they do not have to repeatedly log into multiple applications. This provides a smooth user experience, and makes it less likely to have access problems because of lost or forgotten credentials, locked out accounts, etc.

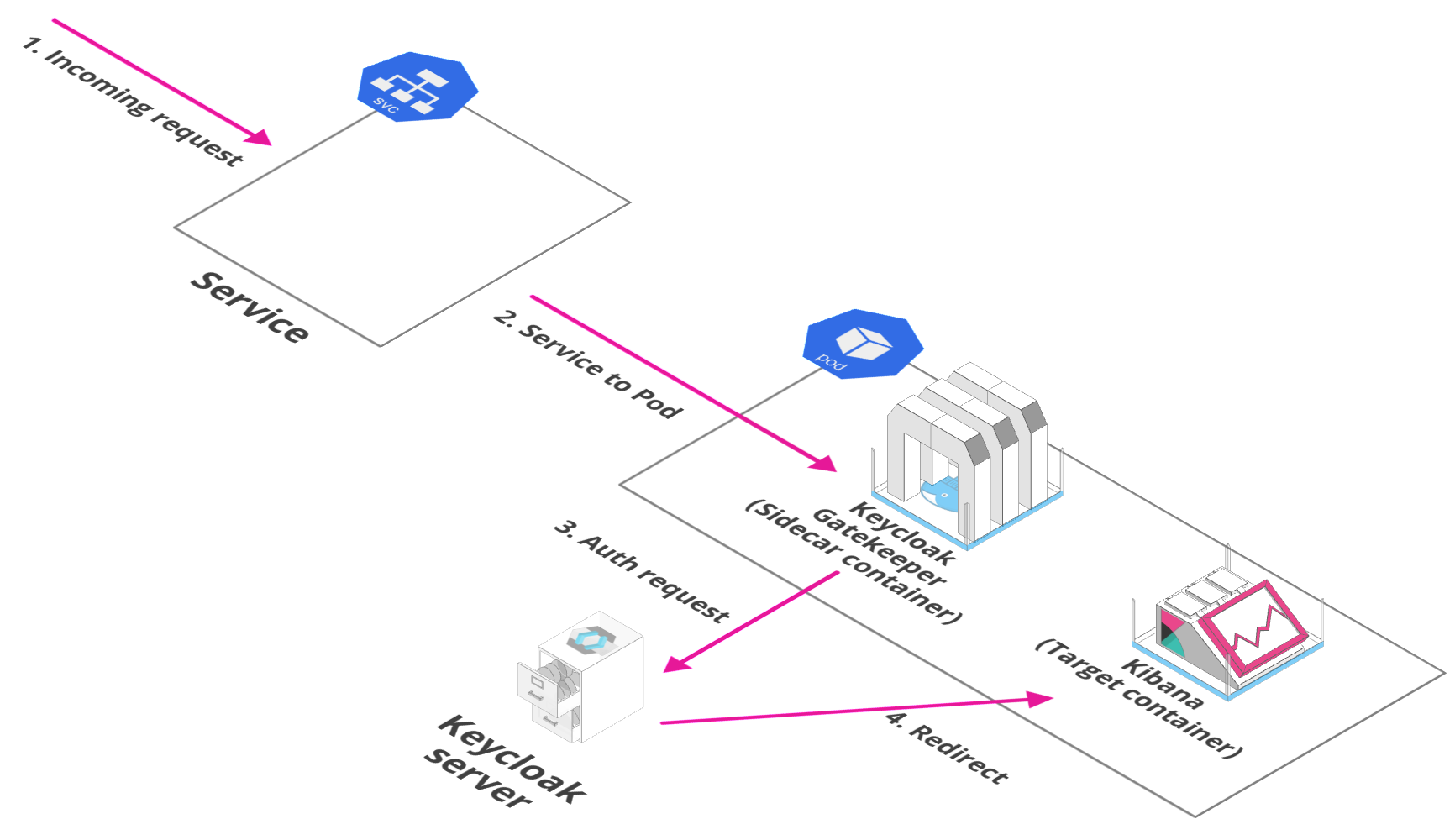

- OpenID Connect

OIDC uses the JSON Web Token (JWT) set of standards. There are really two types of use cases when using OIDC. Our relevant use case is where the application asks the Keycloak server to authenticate a user for them. After a successful login, the application will receive an identity token and an access token. The identity token contains information about the user such as username, email, and other profile information. The access token is digitally signed by the realm and contains access information, e.g. user roles, that the application can use to determine what resources the user is allowed to access on the application.
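To make the token structure concrete, the sketch below decodes the payload segment of a JWT in shell. It is an illustration under assumptions: the token is built locally from made-up claims, it is unsigned and not a real Keycloak token, b64url_encode and b64url_decode are hypothetical helpers, and GNU base64 is assumed.

```shell
# Sketch: read the claims of a JWT by decoding its payload segment.
# The token is built locally from made-up claims; it is unsigned and not a real Keycloak token.

b64url_encode() {
  # base64, then switch to the URL-safe alphabet and drop the padding.
  base64 -w 0 | tr '/+' '_-' | tr -d '='
}

b64url_decode() {
  # Restore the standard alphabet and the padding before decoding.
  seg="$(tr '_-' '/+')"
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do seg="${seg}="; done
  printf '%s' "$seg" | base64 -d
}

HEADER="$(printf '%s' '{"alg":"none","typ":"JWT"}' | b64url_encode)"
CLAIMS="$(printf '%s' '{"preferred_username":"alice","email":"alice@example.com"}' | b64url_encode)"
TOKEN="${HEADER}.${CLAIMS}."

# A JWT is three dot-separated segments: header, payload, signature.
PAYLOAD="$(printf '%s' "$TOKEN" | cut -d. -f2 | b64url_decode)"
printf '%s\n' "$PAYLOAD"
```

Decoding only reveals the claims. An application must still verify the signature against the realm's public key before trusting them, which this sketch does not do.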

Tools Introduction

- Keycloak

Keycloak is an Open Source Identity and Access Management solution. It provides an easy way to add authentication including Single Sign-on to applications and services with minimum effort. Keycloak handles persistence and user authentication all out of the box.

Instead of logging in to individual applications, users authenticate with Keycloak. This means that the individual applications don't have to implement their own login forms, authentication, and storage of users and sessions. Once logged in to Keycloak, users don't have to log in again to access a different application. Similarly, once logged out from Keycloak, users don't have to log out of individual applications. Enabling login with social networks is also easy: the configuration for these can be added via Keycloak's admin console, and no code or changes are required in individual applications.

Keycloak supports the OpenID Connect and SAML protocols. OpenID Connect (OIDC) is an extension of OAuth 2.0. While OAuth 2.0 is only a framework for building authorization protocols, OIDC is a full authentication and authorization protocol. SAML 2.0 is similar to OIDC but a lot older and consequently more mature. It has its roots in SOAP and works by exchanging XML documents between the authentication server and the application, so it tends to be a bit more verbose than OIDC. In most cases OIDC is recommended by Keycloak.

Setup

A stable chart for Keycloak is available in the official public Helm chart repository. The chart deploys a StatefulSet for Keycloak and also includes a deployment of PostgreSQL, which it uses as the database for Keycloak. The chart can also be configured to use an external instance of PostgreSQL, such as AWS RDS, instead of the bundled deployment.

Going by the philosophy of GitOps and maintaining all our configuration in code, we use a JSON file to configure our Keycloak realm and import it into Keycloak at deployment time. Following is an example configuration of the Keycloak chart. The standalone operating mode is not recommended for a production setup because it becomes a single point of failure for applications, but the example is shown in standalone mode to keep things simple while introducing the rest of the required configuration. The realm JSON is loaded into a ConfigMap and then referenced in the Keycloak chart through that ConfigMap.

    replicas: 1
    image:
      repository: jboss/keycloak
      tag: 4.8.3.Final
      pullPolicy: IfNotPresent
    persistence:
      # If true, the Postgres chart is deployed
      deployPostgres: false
      # The database vendor. Can be either "postgres", "mysql", "mariadb", or "h2"
      dbVendor: postgres
      ## The following values only apply if "deployPostgres" is set to "false"
      # Specifies an existing secret to be used for the database password
      existingSecret: "keycloak-secrets"
      # The key in the existing secret that stores the password
      existingSecretKey: database.password
      dbName: kc-database
      dbHost: keycloak-postgresql.rds
      dbPort: 5432
      dbUser: keycloak
    extraArgs: >
      -Djgroups.bind_addr=global
      -Dkeycloak.migration.action=import
      -Dkeycloak.migration.provider=singleFile
      -Dkeycloak.migration.file=/opt/jboss/keycloak/standalone/configuration/import/realm.json
      -Dkeycloak.migration.strategy=IGNORE_EXISTING
    ## Allows the specification of additional environment variables for Keycloak
    extraEnv: |
      - name: OPERATING_MODE
        value: standalone
    ## Add additional volumes and mounts, e. g. for custom themes
    extraVolumes: |
      - name: keycloak-config
        configMap:
          name: keycloak-configmap
          items:
            - key: realm.json
              path: realm.json
    extraVolumeMounts: |
      - name: keycloak-config
        mountPath: /opt/jboss/keycloak/standalone/configuration/import/realm.json
        subPath: realm.json
    podAnnotations:
      fluentdConfiguration: >
        [
          {
            "containers":
            [
              {
                "expression": "/^\\S*\\s-\\s-\\s\\[(?<time>\\S*)[\\S\\s]*\\]\\s(?<message>[\\S\\s]*)/",
                "expressionFirstLine": "/^\\:\\:f{4}:[0-9]+.[0-9]+\\.[0-9]+\\.[0-9]+/",
                "timeFormat": "%d/%b/%Y:%H:%M:%S",
                "containerName": "keycloak"
              }
            ]
          }
        ]
    service:
      labels:
        expose: "true"
      port: 80
      annotations:
        xposer.stakater.com/annotations: |-
          kubernetes.io/ingress.class: external-ingress
          ingress.kubernetes.io/force-ssl-redirect: true
          forecastle.stakater.com/expose: true
          forecastle.stakater.com/icon: https://www.keycloak.org/resources/images/keycloak_logo_480x108.png
          forecastle.stakater.com/appName: keycloak
          exposeIngressUrl: globally
        config.xposer.stakater.com/Domain: company.com
        config.xposer.stakater.com/IngressNameTemplate: '{{.Service}}'
        config.xposer.stakater.com/IngressURLTemplate: 'keycloak.{{.Namespace}}.{{.Domain}}'

The PostgreSQL details are provided in the persistence section, including the server details, database username and password (referenced from a Kubernetes secret).

The realm JSON is loaded from the ConfigMap into a volume defined under extraVolumes, which is mounted into the Keycloak container under extraVolumeMounts. In the extraArgs of the Keycloak container we specify the path of the mounted realm JSON which we want to import.

    keycloak-configmap:
      ConfigMapName: "keycloak-configmap"
      Data:
        realm.json: |-
          {
            "realm": "stakater",
            "enabled": true,
            "loginTheme": "keycloak",
            "sslRequired": "external",
            ...
          }

The operating mode is specified with the environment variables under extraEnv of the container.

It is necessary to create or obtain a client configuration for any application to be able to use Keycloak. You usually configure a new client for each new application hosted on a unique host name. When an application interacts with Keycloak, the application identifies itself with a client ID so Keycloak can provide a login page, single sign-on (SSO) session management, and other services.
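As a rough illustration of how an application identifies itself with its client ID, the sketch below builds the URL an application would redirect the browser to in order to start an OIDC authorization code login. The host `keycloak.company.com`, the client ID `example-app` and the callback URL are hypothetical; the `/auth/realms/<realm>/protocol/openid-connect` path prefix follows the URLs mentioned elsewhere in this document, and the query parameters are the standard OIDC ones.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlsplit


def build_login_url(base_url: str, realm: str, client_id: str, redirect_uri: str):
    """Return (login_url, state) for starting an authorization code flow."""
    # The state value is echoed back by the server and guards against CSRF.
    state = secrets.token_urlsafe(16)
    query = urlencode({
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",
        "scope": "openid",
        "state": state,
    })
    base = base_url.rstrip("/")
    url = f"{base}/auth/realms/{realm}/protocol/openid-connect/auth?{query}"
    return url, state


# Hypothetical values for illustration only.
login_url, login_state = build_login_url(
    "https://keycloak.company.com",
    "stakater",
    "example-app",
    "https://example-app.company.com/callback",
)
print(login_url)
# The client ID travels as a plain query parameter:
print(parse_qs(urlsplit(login_url).query)["client_id"])
```

The application keeps `login_state` in the user's session and compares it with the `state` parameter it receives on the callback before exchanging the code for tokens.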

The traditional method in the OAuth2 specification for authentication is to use a client ID and secret. The client has a secret, which needs to be known to both the application and the Keycloak server. You can generate the secret for a particular client in the Keycloak administration console, or alternatively specify the secret in the Keycloak realm JSON.

Note: It is recommended to restrict access to Keycloak URLs containing the slugs /auth/admin and /auth/realms/master/protocol/openid-connect. These URLs should only be accessible to the admins. We use nginx to restrict access (see nginx-restrict-service-access).
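A minimal sketch of the second option, specifying the client and its secret in the realm JSON, is shown below. The client ID `example-app` and its URL are hypothetical, and the field names (`clientId`, `secret`, `redirectUris`, `publicClient`) are assumed to match Keycloak's realm export format; verify them against an export from your own Keycloak version before relying on them.

```python
import json
import secrets


def make_client(client_id: str, root_url: str) -> dict:
    """Build a confidential OIDC client entry for a realm JSON file."""
    return {
        "clientId": client_id,
        "enabled": True,
        "protocol": "openid-connect",
        "publicClient": False,  # confidential client: authenticates with a secret
        "secret": secrets.token_urlsafe(32),
        "redirectUris": [f"{root_url}/*"],
    }


# Realm settings taken from the example realm.json above; the client is hypothetical.
realm = {
    "realm": "stakater",
    "enabled": True,
    "loginTheme": "keycloak",
    "sslRequired": "external",
    "clients": [make_client("example-app", "https://example-app.company.com")],
}

realm_json = json.dumps(realm, indent=2)
print(realm_json)
```

Because the generated secret ends up in the realm file, that file should be treated like any other credential, for example by keeping it out of public repositories.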
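The access rule in the note can be expressed as a small check. The Python sketch below is not the nginx configuration used by the platform; it only illustrates the intended logic, and the function names and the admin network ranges are assumptions made for the example.

```python
import ipaddress

# URL prefixes from the note above that only admins should reach.
RESTRICTED_PREFIXES = (
    "/auth/admin",
    "/auth/realms/master/protocol/openid-connect",
)


def is_restricted(path: str) -> bool:
    """True if the request path falls under an admin-only prefix."""
    return any(
        path == prefix or path.startswith(prefix + "/")
        for prefix in RESTRICTED_PREFIXES
    )


def is_allowed(path: str, client_ip: str, admin_networks: list) -> bool:
    """Allow everything except restricted paths requested from non-admin IPs."""
    if not is_restricted(path):
        return True
    address = ipaddress.ip_address(client_ip)
    return any(address in ipaddress.ip_network(net) for net in admin_networks)


# Hypothetical admin network range, for illustration only.
ADMIN_NETWORKS = ["10.0.0.0/8"]

print(is_allowed("/auth/admin/master/console", "10.0.0.5", ADMIN_NETWORKS))
print(is_allowed("/auth/admin/master/console", "203.0.113.9", ADMIN_NETWORKS))
print(is_allowed("/auth/realms/stakater/protocol/openid-connect/auth", "203.0.113.9", ADMIN_NETWORKS))
```

Regular realm endpoints stay reachable for all users, while the admin console and the master realm's OIDC endpoints are limited to the listed networks.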

Keycloak with Identity Providers

Follow the steps below to harden Keycloak authentication by using the OpenID Connect (OIDC) plugin to authenticate users via an external authentication service.

Google

1. Open https://console.developers.google.com and select your project.
2. Open the Credentials tab from the left navigation bar.
3. Open the OAuth consent screen tab, select the Application type, give the application a name and add your authorized domain. In our case, it is stakater.com.
4. Open the Credentials tab and create OAuth 2.0 client IDs. Provide your Authorized redirect URIs. In Stakater's case it is https://keycloak.tools.stakater.com/auth/realms/stakater/broker/google/endpoint. Here is how to build this URL:
   - https://keycloak.tools.stakater.com is your external URL for Keycloak.
   - stakater is your realm name.
5. Enable the API for google+ by searching for it from the top search bar in the Google console.
6. Add the google authentication provider in Keycloak.
7. After performing these steps, update the clientId and clientSecret of your Google identity provider in Keycloak with those of the newly created OAuth 2.0 client IDs.

If you face Invalid parameter: redirect_uri or URI mismatch when authenticating with Google, please make sure that:

- You have the correct clientId and clientSecret of the Google IdP.
- Your Keycloak client has Valid Redirect URIs. It must contain the internal endpoint of the Keycloak service.
- Your Authorized redirect URIs in the Google console contain valid URIs.
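The redirect URI described above follows a fixed pattern, so it can be derived from the external Keycloak URL, the realm name and the provider alias. The helper below is a small sketch of that pattern; the function name is made up for this example, and the values passed in are the ones used in the text.

```python
def broker_redirect_uri(keycloak_url: str, realm: str, provider_alias: str) -> str:
    """Build the redirect URI to register with an external identity provider."""
    base = keycloak_url.rstrip("/")
    return f"{base}/auth/realms/{realm}/broker/{provider_alias}/endpoint"


print(broker_redirect_uri("https://keycloak.tools.stakater.com", "stakater", "google"))
# → https://keycloak.tools.stakater.com/auth/realms/stakater/broker/google/endpoint
```

A mismatch between this value and the Authorized redirect URIs registered in the Google console is a common cause of the redirect_uri errors mentioned above.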

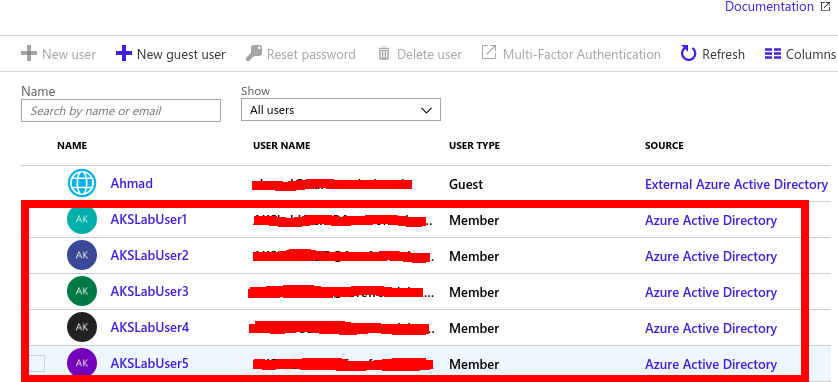

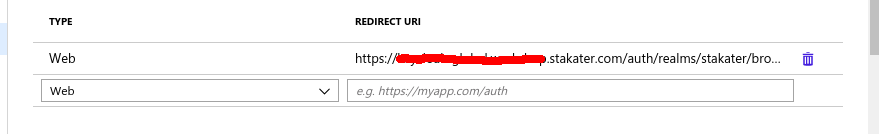

Azure Active Directory

Follow this tutorial if you want to deploy Azure Active Directory with Azure Kubernetes Service (AKS).

1. Create users in Azure using the Administrator account. The new users will be listed with the source Azure Active Directory.
2. Go to your Azure AD instance, select App Registrations on the left panel and click Endpoints on the top panel. Copy the values of OAuth 2.0 authorization endpoint (v2) and OAuth 2.0 token endpoint (v2). These will be used in the Keycloak configuration.
3. Go to your Azure AD instance, select App Registrations on the left panel and select the Azure AD Client Application created when Azure Active Directory was created. Copy the Application (client) ID. Click Certificates and Secrets on the left panel, click the New Client Secret button and create a new secret. Copy the value of the secret.
4. After the users are created, open the Keycloak administrative console and select Identity Providers in the left panel.
5. Click on the Add Provider drop-down and select OpenID Connect v1.0.
6. Fill in the options as below:

- alias: azure-ad

- Display Name: Microsoft Azure AD

- First login flow: first broker login

- Authorization URL: (OAuth 2.0 authorization endpoint (v2))

- Token URL: (OAuth 2.0 token endpoint (v2))

- Client ID: (Application (client) ID) from step 3

- Client secret: client secret from step 3

- Prompt: unspecified

Click Save to create the OIDC type Identity Provider.
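In keeping with the GitOps approach used for the realm, the same identity provider could also be described as data instead of being entered in the console. The sketch below assembles such an entry in Python. The tenant ID, client ID and secret are placeholders, the endpoint pattern is the Microsoft identity platform v2.0 one, and the field names (`providerId`, `firstBrokerLoginFlowAlias`, `config`) are assumed to match Keycloak's realm export format, so compare them with an export from your own instance.

```python
import json


def azure_endpoints(tenant_id: str) -> dict:
    """Return the Azure AD v2.0 authorization and token endpoints for a tenant."""
    base = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0"
    return {
        "authorizationUrl": f"{base}/authorize",
        "tokenUrl": f"{base}/token",
    }


def azure_identity_provider(tenant_id: str, client_id: str, client_secret: str) -> dict:
    """Build an OIDC identity provider entry mirroring the options listed above."""
    config = azure_endpoints(tenant_id)
    config.update({"clientId": client_id, "clientSecret": client_secret})
    return {
        "alias": "azure-ad",
        "displayName": "Microsoft Azure AD",
        "providerId": "oidc",
        "enabled": True,
        "firstBrokerLoginFlowAlias": "first broker login",
        "config": config,
    }


# Placeholder values; substitute the ones copied from your Azure AD app registration.
provider = azure_identity_provider(
    tenant_id="00000000-0000-0000-0000-000000000000",
    client_id="application-client-id",
    client_secret="client-secret-value",
)
print(json.dumps(provider, indent=2))
```

The resulting dictionary could be placed in the identity provider list of the realm JSON, which keeps the Azure AD integration reproducible across deployments.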